Here is a fun experiment to try: find a room in your house or apartment that has only one window. Seal off the room so that it is as dark as possible. Next, punch a tiny pinhole into a large sheet of cardboard and affix the cardboard over the window.

If done correctly, the rays of light passing through the pinhole in the cardboard will strike the opposite wall and produce an inverted image of the scene outside. Congratulations, you have just created the most basic camera possible!
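To see why the picture on the wall comes out upside down, here is a minimal Python sketch of ideal pinhole projection. It assumes an idealized geometry that the experiment above does not specify: the pinhole sits at the origin, the scene lies at positive z outside the window, and the wall is the plane z = -d behind the pinhole. The function name `pinhole_project` and the sample distances are hypothetical. By similar triangles, a scene point (X, Y, Z) lands on the wall at (-dX/Z, -dY/Z).

```python
from typing import Tuple

Point3 = Tuple[float, float, float]


def pinhole_project(point: Point3, wall_distance: float) -> Tuple[float, float]:
    """Project a scene point through an ideal pinhole onto the back wall.

    Assumed (hypothetical) coordinates: pinhole at (0, 0, 0), scene at z > 0,
    wall on the plane z = -wall_distance.
    """
    x, y, z = point
    if z <= 0:
        raise ValueError("scene point must be in front of the pinhole (z > 0)")
    # The straight ray from the point through the origin reaches the wall
    # after being scaled by -wall_distance / z (similar triangles).
    scale = -wall_distance / z
    return (x * scale, y * scale)


# A point 1 m to the side of and 2 m above the pinhole, 10 m away,
# imaged on a wall 4 m behind the window.
print(pinhole_project((1.0, 2.0, 10.0), 4.0))  # (-0.4, -0.8)
```

Both coordinates change sign, so a point 2 m above the pinhole lands 0.8 m below it on the wall; that sign flip is the inversion. Because the scale factor is d/Z, more distant objects are also drawn smaller.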

The setup described above is sometimes called a camera obscura, or pinhole camera. It is one of a number of naturally occurring optical phenomena that vision scientists Antonio Torralba and Bill Freeman have dubbed “accidental cameras” (or natural cameras): natural optical mechanisms that produce an image of the surrounding environment. As it turns out, there are quite a number of natural cameras around us that carry visual information our eyes fail to pick up. Houseplants, wall corners, and other common objects all reflect or cast this kind of hidden visual information. The problem is that the information is usually too dim or noisy to be parsed with the naked eye. But advances in computer vision technology may soon bring this hidden world of accidental cameras to light. Torralba and Freeman have made waves in the scientific community by investigating how these accidental cameras work and highlighting applications for them.

Using Computers To See Things

Fans of the 1982 film Blade Runner might recall the infamous “enhance scene”, in which Harrison Ford’s character, Rick Deckard, analyzes a photograph of a crime scene with his high-tech computer. By instructing the computer to analyze the light reflecting off of a mirror in the photo, Deckard is able to construct an image of the contents of an adjacent room, an image that was not visible in the original photograph. At the time, such a process was purely speculative science fiction. Modern computer vision technology, though, might just be able to take that scene out of the realm of fiction and place it squarely in the realm of reality.

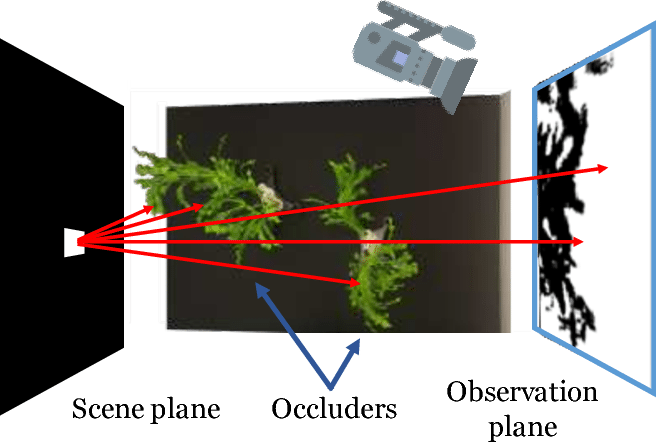

For example, Torralba and Freeman co-authored a study this past summer in which the team showed that they could construct a 3-dimensional model of a room simply by analyzing the patterns of shadows cast by a houseplant in that room. The houseplant blocks part of the light in the room and casts 2-dimensional shadows on an adjacent wall; by analyzing the fluctuations in those shadow patterns, scientists can extrapolate the shape, size, and layout of things in the room. Initially, the leaves of the houseplant block out certain spots of light on the wall. As the leaves move, they block the light differently, and the intensity of the light on the wall changes. By subtracting the data in the first image from the data in the second image, one can pull out the hidden images produced by the houseplant.

A schematic representing how a houseplant can be used as a natural camera. Source: Baradad, M., et al. (2018). “Inferring Light Fields from Shadows.” IEEE Xplore. <http://openaccess.thecvf.com/content_cvpr_2018/papers/Baradad_Inferring_Light_Fields_CVPR_2018_paper.pdf>
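The subtraction step in the houseplant study can be illustrated with a toy Python sketch. It shows only the simple frame-differencing idea described in the text, not the light-field reconstruction in the Baradad et al. paper, which inverts a full model of how the plant blocks light. The 3×3 "wall" brightness values and the function name `difference_image` are hypothetical, chosen purely for illustration.

```python
from typing import List

# A grayscale frame as rows of brightness values (hypothetical toy data).
Image = List[List[float]]


def difference_image(before: Image, after: Image) -> Image:
    """Return the pixel-wise difference (after - before) of two equal-size frames."""
    if len(before) != len(after) or any(
        len(row_b) != len(row_a) for row_b, row_a in zip(before, after)
    ):
        raise ValueError("frames must have the same dimensions")
    # Unchanged pixels cancel to zero; only the change in shadow survives.
    return [
        [a - b for b, a in zip(row_before, row_after)]
        for row_before, row_after in zip(before, after)
    ]


# Toy wall: a leaf shadow (0.25) sits in the centre, then moves one pixel right.
before = [[1.0, 1.0, 1.0],
          [1.0, 0.25, 1.0],
          [1.0, 1.0, 1.0]]
after = [[1.0, 1.0, 1.0],
         [1.0, 1.0, 0.25],
         [1.0, 1.0, 1.0]]

for row in difference_image(before, after):
    print(row)
# [0.0, 0.0, 0.0]
# [0.0, 0.75, -0.75]
# [0.0, 0.0, 0.0]
```

The positive value marks where the shadow used to be and the negative value where it moved to, while the unchanged background cancels out. Real wall measurements are far dimmer and noisier than this toy example, which is part of why the actual methods need so much computation.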

Similarly, a related 2014 study spearheaded by Freeman showed that scientists can reconstruct audio by examining the minuscule vibrations of everyday objects. By using a high-speed camera to record the minute vibrations of an empty bag of chips, the team was able to reconstruct the audio of a conversation occurring in a separate room. Incidentally, part of the recording was of “Mary Had a Little Lamb”, the first song ever recorded on a phonograph, by Thomas Edison in 1877. Torralba and Freeman were also involved in a 2012 study showing that by examining the shadows cast around the corners of walls, they could reconstruct the hidden scene on the other side of the wall.

To be clear, simply using computers to help us image things is not new. Techniques like MRI and scanning electron microscopy use computer imaging to render a picture from information we cannot detect with the naked eye. However, most of these techniques rely on direct interaction with the object being imaged. A scanning electron microscope, for instance, scans a beam of electrons across a sample and builds an image from the electrons that come back off its surface. In contrast, the kind of “non-line-of-sight imaging” investigated by Torralba and Freeman does not interact directly with the scene it reconstructs. Instead, the computer extrapolates an image from the way ambient light in the environment is reflected and blocked. As a simpler analogy, it is like the difference between photographing a fire directly and reconstructing an image of the fire from information in the smoke it produces. The first method is direct and relatively straightforward; the second is much more indirect and requires complex computation.

Of course, technologies exploiting accidental cameras have a number of applications. Apart from military and espionage uses, non-line-of-sight imaging could be used in self-driving cars, medical imaging, astronomy, photography and cinema, and robotic vision. For now, the main obstacle to mainstream adoption is that existing methods are still difficult to pull off: it takes a large amount of computational power and technical know-how to reliably parse visual information buried in signal noise. Yet the past 8 years have seen great advances in non-line-of-sight imaging techniques, so perhaps we are not far from a world in which Blade Runner-esque tech is an everyday commodity.