Recent years have seen an increase in the number of robotic solutions geared toward social interactions with humans. Social robots are designed to collaborate and work with people, engaging them at an interpersonal and socio-affective level (Breazeal, Takanishi, & Kobayashi, 2008). They represent a departure from current technology, not only in terms of design (humanoid design) and behavior (simulation of psychological traits) but also in the range of proposed tasks (e.g. domestic chores, health care, education).

To contribute to the ongoing conversation about what makes human-robot interaction successful, we set out to explore the socio-cognitive processes underlying social robot use and acceptance.

The social representation of a robot

To explore what the word "robot" currently means to people, we asked 212 participants: "What are the ideas that first come to mind when you hear the word robot?" (Piçarra, Giger, Pochwatko, & Gonçalves, 2016). This approach, called free evocation, is used in the study of social representations.¹ We learned that people view robots as advanced machines (what) deployed in industrial and domestic settings (where) to assist and replace humans in mundane, hard, and dangerous tasks (why) at some point in the future (when). Our results are in line with those of the special Eurobarometer report on Public Attitudes Towards Robots (TNS Opinion & Social, 2012),² and they underline the gap between current lay visions of the technology and iconic creations of the robot industry such as Asimo (Honda) or Sophia (Hanson Robotics).

Attitudes toward robots

Social representations offer a wide-angle view of socio-cognitive processes. To narrow our focus, we turned to attitudes. Attitudes can be broadly defined as a person's evaluative stance toward a behavior and its expected consequences (e.g., enjoyable vs. unenjoyable, good vs. bad). Following the work of Nomura, Kanda, and Suzuki (2006), we tested the psychometric properties and predictive value of the Portuguese version of the Negative Attitudes towards Robots Scale (NARS; Piçarra, Giger, Pochwatko, & Gonçalves, 2015) in four studies involving a total of 1,002 participants.

Our analyses identified two factors: negative attitudes toward robots with human traits (NARHT) and negative attitudes toward interactions with robots (NATIR). This factorial structure was later confirmed with a Polish sample of 213 participants (Pochwatko et al., 2015). These results suggest that people's judgments about interacting with robots in general and about robots with human traits do not overlap, which poses design challenges.

The NARS showed good predictive value for attitudes toward working with an android social robot and for perceived behavioral control (i.e., the perceived ease or difficulty of performing the behavior, given the internal and external constraints on behavior and self-efficacy).

Intention to work with social robots

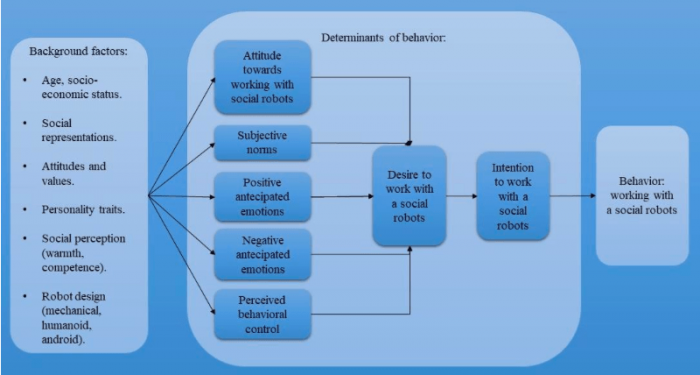

A person may have a positive attitude toward social robots yet have no intention to use one. Socio-cognitive models contend that the proximal determinant of behavior is not attitude but a person's intention to act (i.e., a person's willingness to exert effort in pursuit of a behavior). Intention is determined by attitudes toward the behavior, perceived behavioral control, subjective norms (i.e., the perception that important others will approve or disapprove of the behavior), and anticipated emotions (i.e., what a person imagines she will feel if she succeeds or fails in performing the behavior).

The model of goal-directed behavior (MGB; Perugini & Bagozzi, 2001) contends that the effects of these variables on intention are mediated by desire (i.e., the motivational commitment to perform a behavior). Variables such as age, socioeconomic status, and personality traits are considered external to the model.
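To make the idea of mediation concrete, here is a minimal sketch of a simple product-of-coefficients mediation estimate. It is not the analysis used in the published study and does not reproduce its results. The data are simulated, and the variable names (`anticipated_emotions`, `desire`, `intention`) and the path coefficients 0.6 and 0.7 are hypothetical, chosen only for illustration. The indirect effect is estimated as a × b, where a is the effect of the predictor on desire and b is the effect of desire on intention with the predictor held constant. c′ is the remaining direct effect of the predictor on intention.

```python
import numpy as np


def ols(predictors, outcome):
    """Ordinary least squares; returns coefficients with the intercept first."""
    design = np.column_stack([np.ones(len(outcome)), predictors])
    coefs, *_ = np.linalg.lstsq(design, outcome, rcond=None)
    return coefs


def mediation(x, m, y):
    """Product-of-coefficients mediation estimate for x -> m -> y.

    a:       effect of x on the mediator m
    b:       effect of m on y, controlling for x
    c_prime: direct effect of x on y, controlling for m
    """
    a = ols(x, m)[1]
    _, c_prime, b = ols(np.column_stack([x, m]), y)
    return a * b, c_prime


# Hypothetical simulated data: emotions affect intention only through desire.
rng = np.random.default_rng(seed=0)
n = 1000
anticipated_emotions = rng.normal(size=n)
desire = 0.6 * anticipated_emotions + rng.normal(scale=0.8, size=n)
intention = 0.7 * desire + rng.normal(scale=0.8, size=n)

indirect, direct = mediation(anticipated_emotions, desire, intention)
print(f"indirect effect (a*b): {indirect:.2f}")
print(f"direct effect (c'):    {direct:.2f}")
```

The simulated data contain no direct path from emotions to intention. The indirect effect should therefore land near 0.6 × 0.7 = 0.42 and the direct effect near zero, which is the pattern of an effect transmitted through desire. In practice, the indirect effect is usually tested with bootstrap confidence intervals rather than read off a single point estimate.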

Using the MGB as our theoretical framework, we set out to study the intention to work with social robots (Piçarra & Giger, 2018). We invited 217 participants to watch a video portraying a social robot and asked them about their willingness to work with such a robot in the near future. The video offered a brief definition of a social robot and described a set of tasks such robots could perform, ranging from hotel host to elder care. Participants were assigned to one of three conditions, watching a video displaying either a mechanical robot, a humanoid robot, or an android robot.

Our results offer some interesting insights into the intention to work with social robots. First, the role of anticipated emotions as determinants of desire and intention was confirmed. In fact, their effect surpassed that of attitudes toward working with social robots. Second, the results underlined the role of others' evaluations, showing that subjective norms play an important role in determining intention. Third, all the variables had their effects on intention mediated by desire, underlining its motivational role.

Diagram of the conceptual model. Figure courtesy Nuno Piçarra & Jean-Christophe Giger

Social perception and social robots

Once the main model for intention was tested, we set out to study the effects of social perception and robot design. According to the stereotype content model (Fiske, Cuddy, & Glick, 2007), social perception answers two key questions: 1) do outgroup members have good (vs. bad) intentions toward me and my group? (warmth); and 2) can outgroup members enact their intentions? (competence). The warmth dimension encompasses traits such as friendliness and trustworthiness. The competence dimension encompasses traits such as intelligence and efficacy.

Our results showed statistically significant effects for both competence and warmth. The largest direct effect of competence was on attitudes toward working with robots, while the largest direct effect of warmth was on positive anticipated emotions. This suggests that warmth and competence might differentially affect emotional appraisals and attitudinal evaluations. This hypothesis, however, requires further confirmation.

Our analysis found no relation between robot design and the effects of competence in the model. The effects of warmth, on the other hand, seemed to depend on robot appearance. The effect of warmth on attitudes toward working with social robots was significantly stronger among participants who viewed the android robot video than among those who viewed the mechanical robot video. Likewise, the effect of warmth on subjective norms was significantly stronger among participants who viewed the android robot video than among those who viewed the humanoid robot video. These were, however, small effects that preclude any firm conclusion.

What are the practical implications?

The results of the social representations study should alert us to the challenges posed by the gap between lay conceptions of robots and today's socio-affective technologies. Since people use current knowledge to make sense of new information, there is a risk that people will find the idea of a humanoid social robot overwhelming.

The two-factor structure identified for attitudes toward robots suggests that people hold separate views on "how I feel about interacting with robots" and "how I feel about robots with human traits." This difference has serious implications for robot design. For example, a person may have a positive attitude toward the use of social robots in health care, as long as the robots do not exhibit too much "humanity."

Given the role of anticipated emotions in the intention to work with social robots (e.g., "working with a social robot in the near future will make me feel proud"), promoting these robots by emphasizing their mechanical and computational proficiency while ignoring workers' sense of self-worth and career prospects might reduce acceptance or even lead to rejection.

The role played by significant others, be they friends, co-workers, or managers, must also be considered. A person might favor working with social robots but refrain from doing so because they fear a negative evaluation from their peers. Or they may feel pressured to do so in order to comply with management directives.

Since social robots are designed to engage people at the socio-affective level, the background effect of the social perception dimensions of competence and warmth should not be overlooked, as it may determine approach/avoidance tendencies. The effects of robot design on social perception were inconclusive and thus warrant further research.

Our results also showed that a positive view of robots per se may not guarantee their acceptance. Although participants presented a positive attitude toward working with social robots and viewed themselves as competent to do so, this was not accompanied by high levels of desire or intention to do it. These results might simply reflect the limited opportunities to interact directly with robots in daily life, or they might already be a consequence of the expectation gap identified in the social representations study.

These findings are described in the article entitled "Predicting intention to work with social robots at anticipation stage: Assessing the role of behavioral desire and anticipated emotions," recently published in the journal Computers in Human Behavior. This work was conducted by Nuno Piçarra from the University of Algarve and Jean-Christophe Giger from the University of Algarve and the Centre for Research in Psychology (CIP-UAL).

Footnotes:

- The concept of social representation was developed by Serge Moscovici (1988). For a review of the structural model of social representations, see J.-C. Abric (1993).

- The special Eurobarometer "Public Attitudes Towards Robots" (TNS Opinion & Social, 2012) reports that around 80% of participants stated that an industrial robot fitted well with the image they had of robots. When asked to forecast when robots would be used for housework and domestic tasks, 51% answered in 20 or more years' time and only 4% said it was already common.

References:

- Abric, J.-C. (1993). Central system, peripheral system: Their functions and roles in the dynamics of social representations. Papers on Social Representations – Textes sur les représentations sociales, 2, 75–78. (Retrieved from 1993Abric.pdf)

- Breazeal, C., Takanishi, A., & Kobayashi, T. (2008). Social robots that interact with people. In B. Siciliano, & O. Khatib (Eds.), Springer handbook of robotics (pp. 1349-1369). Berlin, Heidelberg: Springer. https://link.springer.com/referenceworkentry/10.1007%2F978-3-540-30301-5_59.

- Fiske, S., Cuddy, A., & Glick, P. (2007). Universal dimensions of social cognition: Warmth and competence. Trends in Cognitive Sciences, 11(2), 77–83. https://doi.org/10.1016/j.tics.2006.11.005

- Moscovici, S. (1988). Notes towards a description of social representations. European Journal of Social Psychology, 18, 211–250.

- Nomura, T., Kanda, T., & Suzuki, T. (2006). Experimental investigation into influence of negative attitudes toward robots on human–robot interaction. AI & Society, 20(2), 138–150.

- Perugini, M., & Bagozzi, R. (2001). The role of desires and anticipated emotions in goal-directed behaviours: Broadening and deepening the theory of planned behavior. British Journal of Social Psychology, 40(1), 79–98.

- Piçarra, N., & Giger, J.-C. (2018). Predicting intention to work with social robots at anticipation stage: Assessing the role of behavioral desire and anticipated emotions. Computers in Human Behavior, 86, 129–146.

- Piçarra, N., Giger, J.-C., Pochwatko, G., & Gonçalves, G. (2015). Validation of the Portuguese version of the Negative Attitudes towards Robots Scale. Revue Européenne de Psychologie Appliquée/European Review of Applied Psychology, 65(2), 93–104.

- Piçarra, N., Giger, J.-C., Pochwatko, G., & Gonçalves, G. (2016). Making sense of social robots: A structural analysis of the layperson's social representation of robots. Revue Européenne de Psychologie Appliquée/European Review of Applied Psychology, 66(6), 277–289.

- Pochwatko, G., Giger, J.-C., Różańska-Walczuk, M., Świdrak, J., Kukiełka, K., Możaryn, J., & Piçarra, N. (2015). Polish version of the Negative Attitude Toward Robots Scale (NARS-PL). Journal of Automation, Mobile Robotics & Intelligent Systems, 9(3), 65–72. https://doi.org/10.14313/JAMRIS_3-2015/25

- TNS Opinion & Social. (2012). Public attitudes towards robots (Special Eurobarometer 382). European Commission. Retrieved from opinion/index en.htm