Every day, people are exposed to harmful levels of noise. Noise pollution is one of the most poorly controlled man-made pollutants, and meeting the acceptable standard for community noise is a very challenging problem. In fact, in the transport industry, trying to meet these standards can be one of the main barriers to technological improvements and to reducing operational costs.

We could have trains that go well over 300 km/h if it weren’t for the fact that we would blow out the eardrums of people living near the rail tracks. We could have cheaper flights if we were able to keep airports open during the night. For these reasons, a lot of public and commercial research effort goes into studying man-made noise. And surprisingly, scientists are using cameras to do it.

But what do cameras have to do with sound? When most people think of a camera, they think of the one on their smartphone. Perhaps, if you’re a real enthusiast, you’ll break out your DSLR for ultra-high-quality photos. These cameras rely on a synthesis of reflected visible light waves to form images. But imaging with other types of waves is also very common. For example, radio waves are used in radio astronomy to visualize large celestial bodies, and high-frequency vibrations are used in ultrasound to give you a picture of the inside of the human body.

Over the last 20 years, scientists have developed technology that forms visual images out of sound waves, showing where a sound is coming from in physical space. Instead of arrays of photoreceptors that detect light waves, arrays of microphones are used to detect sound waves. The recorded sound waves are then synthesized using array processing algorithms to create images.

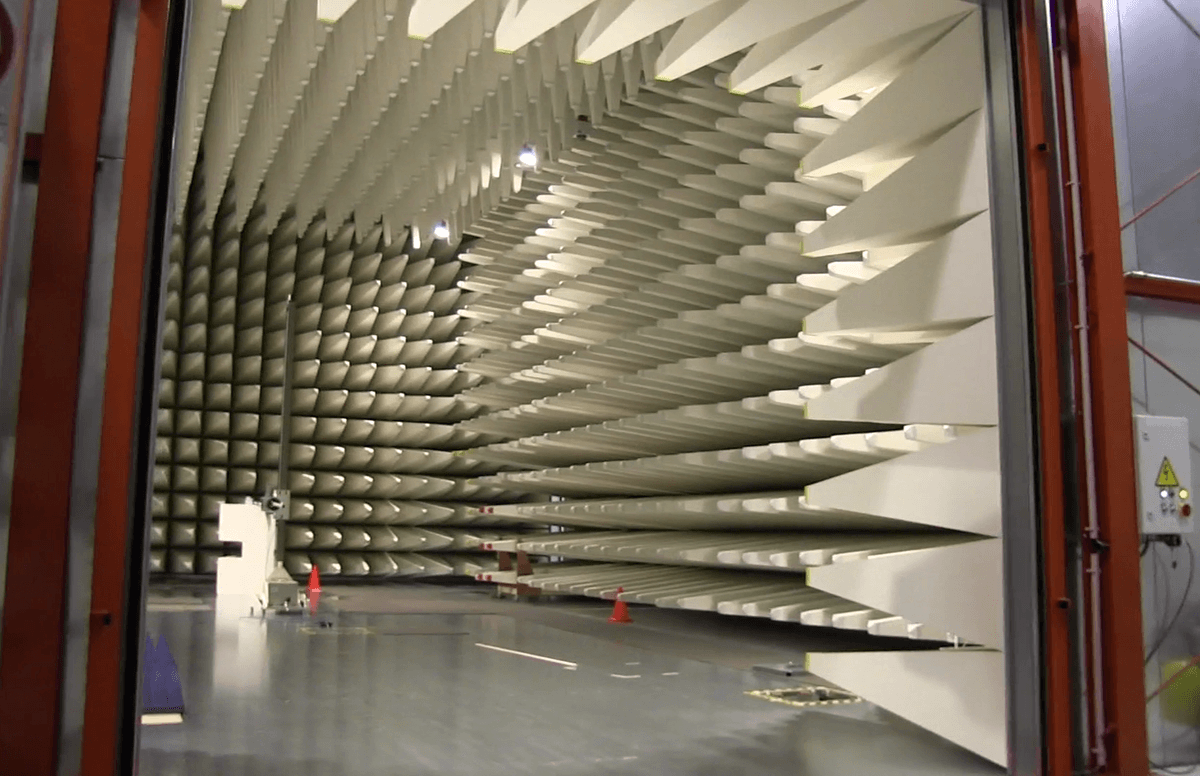
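To make the array processing step a little more concrete, here is a minimal sketch of one common approach, frequency-domain delay-and-sum beamforming. It is an illustration only, not the processing used in any particular facility: the microphone layout, the 4 kHz tone, the source position and the function name `beamform_map` are all assumptions made for this example.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s in still air (assumed)


def beamform_map(mic_xy, pressures, scan_xy, wavenumber):
    """Delay-and-sum power at each scan point for a single frequency."""
    # Distance from every scan point to every microphone: (n_scan, n_mics).
    dist = np.linalg.norm(scan_xy[:, None, :] - mic_xy[None, :, :], axis=2)
    # Phase each microphone would record if the source sat at the scan point.
    steering = np.exp(-1j * wavenumber * dist)
    # Undo that phase and add the channels; they line up only at the source.
    summed = (np.conj(steering) * pressures[None, :]).sum(axis=1)
    return np.abs(summed) ** 2 / mic_xy.shape[0] ** 2


# Hypothetical set-up: 32 microphones on a 1 m line, source 1 m away.
frequency = 4000.0  # Hz
wavenumber = 2 * np.pi * frequency / SPEED_OF_SOUND
mic_xy = np.column_stack([np.linspace(-0.5, 0.5, 32), np.zeros(32)])
source_xy = np.array([0.3, 1.0])

# Simulated recordings: a point source radiating into still air.
r = np.linalg.norm(mic_xy - source_xy, axis=1)
pressures = np.exp(-1j * wavenumber * r) / r

# Scan a line through the source plane and pick the brightest point.
scan_x = np.linspace(-1.0, 1.0, 201)
scan_xy = np.column_stack([scan_x, np.full_like(scan_x, 1.0)])
power = beamform_map(mic_xy, pressures, scan_xy, wavenumber)
estimated_x = scan_x[np.argmax(power)]
print(f"Brightest point at x = {estimated_x:.2f} m")
```

Plotting `power` against `scan_x` gives a one-dimensional "image" of the sound field, with its peak at the simulated source. A real acoustic camera does the same thing over a two-dimensional grid and with measured rather than simulated signals.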

These acoustic cameras (known as ‘beamformers’ in the community) are typically used to study aerodynamic systems that make a lot of noise (like wings, wind turbines and the inside of cars) and figure out ways to reduce it. Beamformers are now a standard research tool in any aeroacoustic facility and have an active research community, including at NASA and DLR, who dedicate their research to improving the technology.

In most facilities, beamformers are located outside an open-jet wind tunnel, in which a jet of air expands out of a nozzle at a speed typically 10-20% of the speed of sound. Miniature models are placed in the air jet. The sound is generated through aeroacoustic mechanisms, travels through the air jet, and is eventually recorded by the microphones.

It is well known that sound travels differently in moving air than in stationary air. As human beings, we experience this every day as the Doppler effect: an ambulance siren sounds higher in pitch as it approaches and lower in pitch after it passes you. It is also well understood that waves bend when passing from one medium to another. This is why, when you look at your hand underwater, it appears shifted from where it should be.
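The siren example can be put into numbers with the textbook Doppler formula for a source moving directly towards or away from a stationary listener. The sketch below is only an illustration: the 700 Hz siren tone, the 25 m/s ambulance speed and the function name `observed_frequency` are assumed values chosen for this example.

```python
SPEED_OF_SOUND = 343.0  # m/s in still air (assumed)


def observed_frequency(emitted_hz, source_speed, approaching):
    """Frequency heard by a stationary listener from a moving source."""
    if not 0 <= source_speed < SPEED_OF_SOUND:
        raise ValueError("source speed must be non-negative and subsonic")
    # Approaching sources compress the wavefronts; receding ones stretch them.
    relative = -source_speed if approaching else source_speed
    return emitted_hz * SPEED_OF_SOUND / (SPEED_OF_SOUND + relative)


siren_hz = 700.0        # hypothetical siren tone
ambulance_speed = 25.0  # m/s, about 90 km/h

heard_approaching = observed_frequency(siren_hz, ambulance_speed, approaching=True)
heard_receding = observed_frequency(siren_hz, ambulance_speed, approaching=False)
print(f"approaching: {heard_approaching:.0f} Hz, receding: {heard_receding:.0f} Hz")
```

The same physics is at work in the wind tunnel, except that there it is the air that moves while the source and the microphones stay still.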

What this means is that the image that you create using the beamforming algorithm becomes distorted because the sound has to travel across the boundary of the air jet, also known as the “shear layer”. Therefore, you need to apply a mathematical correction to account for the distortion, similar to putting a pair of glasses on our acoustic camera.

The mathematical equations that describe this correction only exist when you assume a planar (flat) shear layer. In most cases, this assumption is not unreasonable, since most beamformers are planar and are applied to planar shear layers.
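For the flat case, the idea behind such a correction can be sketched using the principle that sound follows the path of least travel time. The code below is an illustrative sketch only; it is neither the classic correction formula nor the equations from the article discussed here. It assumes an infinitely thin, flat shear layer, uniform flow along x inside the jet, still air outside, and a source at the origin. All function names and numbers are made up for the example.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s (assumed)


def time_in_jet(dx, dz, mach):
    """Travel time over (dx, dz) in air flowing along +x at the given Mach number."""
    beta2 = 1.0 - mach**2
    return (-mach * dx + math.sqrt(dx**2 + beta2 * dz**2)) / (SPEED_OF_SOUND * beta2)


def time_in_still_air(dx, dz):
    """Travel time over (dx, dz) in air at rest."""
    return math.hypot(dx, dz) / SPEED_OF_SOUND


def corrected_path(mic_x, mic_z, layer_z, mach, span=10.0, iterations=200):
    """Crossing point on the layer and travel time, source at the origin."""
    def total_time(cross_x):
        return (time_in_jet(cross_x, layer_z, mach)
                + time_in_still_air(mic_x - cross_x, mic_z - layer_z))

    # total_time is convex in cross_x, so a ternary search finds its minimum.
    lo, hi = min(0.0, mic_x) - span, max(0.0, mic_x) + span
    for _ in range(iterations):
        a = lo + (hi - lo) / 3
        b = hi - (hi - lo) / 3
        if total_time(a) < total_time(b):
            hi = b
        else:
            lo = a
    cross_x = 0.5 * (lo + hi)
    return cross_x, total_time(cross_x)


# Hypothetical geometry: microphone 1.5 m directly above the source,
# shear layer 0.5 m above the source, jet at Mach 0.15.
cross_x, travel_time = corrected_path(mic_x=0.0, mic_z=1.5, layer_z=0.5, mach=0.15)
print(f"crossing point {cross_x:.3f} m downstream, "
      f"travel time {travel_time * 1e3:.3f} ms")
```

With the flow switched on, the sound crosses the layer slightly downstream of the source, so the microphone hears it arriving from the wrong direction. A beamformer that uses the corrected travel time instead of the straight-line distance divided by the speed of sound puts the source back where it belongs.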

However, in reality, air jets are three-dimensional and their boundaries assume the shape of the outlet nozzle. This means they can be circular, rectangular or even octagonal in cross-section. Additionally, there has recently been some research effort focusing on three-dimensional beamforming, which makes 3D holograms of noise propagation instead of simple 2D images. Three-dimensional beamforming can only be achieved if the microphones are placed around the air nozzle, picking up noise from different directions. If this is the case, we can no longer assume that the beamformer is planar, and thus the classic shear layer correction breaks down. The only option here is a very computationally expensive approach called ray tracing, which requires following the path of every single sound ray to every single microphone from every possible source of the noise. This is not practically feasible for most researchers, who need rapid results. Therefore, a new, computationally inexpensive shear layer correction technique is required.

The team at UNSW has solved this issue by formulating a set of simple equations that correct for a three-dimensional shear layer. The equations make no assumptions about the shape of the shear layer. In the planar case they give precisely the same result as the classic 2D correction, but they can also be extended into the third dimension.

To test the new correction method, we collaborated with a team at Brandenburg University of Technology, who happen to have a wind tunnel with a circular shear layer. We took their acoustic beamforming data for a wing and compared the results with and without our correction. We found that our method successfully corrected for the distortion, and did so in a fraction of the time that ray tracing would have taken.

So, the next time you hear a plane loudly passing by, know that there is an active body of researchers working to make your life more comfortable. And, with the new generalized correction method, scientists can now rapidly study different aeroacoustic shapes using 2D and 3D beamforming, making 24-hour airports in residential areas a reality sooner rather than later. This spells good news for anyone living near an airport (but I wouldn’t be rushing to buy the Kerrigan’s house just yet).

These findings are described in the article entitled “A correction method for acoustic source localisation in convex shear layer geometries”, published in the journal Applied Acoustics. This work was led by Ric Porteous from the School of Mechanical Engineering, The University of Adelaide.