Very often, it is only a matter of time before something in science fiction becomes science fact. This past week, tech giant Google held an event in San Francisco where it unveiled products including the Google Home Mini, a new Chromebook, and the new version of its Google Pixel phone. One of the most intriguing announcements at the event was Google’s new Pixel Buds, which are reportedly capable of translating up to 40 languages using Google Translate technology.

In covering the Pixel Buds, media outlets have made a number of allusions to Douglas Adams’s The Hitchhiker’s Guide to the Galaxy and its Babel fish, which allowed anyone to understand any language simply by putting a fish into their ear. Artificial intelligence has brought this concept out of the realm of fiction and into reality.

The Google Pixel Buds require a Pixel phone to provide translation. If you want to speak French, all you have to do is speak into the right earbud and say “Help me speak French.” The next time you speak, your words are translated into French, and when someone speaks French to you, you hear the translation through the earbuds.

Google describes it as having “your own personal translator with you everywhere you go.” Initial demonstrations were impressive: the earbuds translated on the fly with no discernible lag and were remarkably adept at picking up other people’s speech.

Superior Translation with Neural Networks

The Pixel Buds are made possible by artificial intelligence, specifically Google’s new Google Neural Machine Translation (GNMT) system. GNMT is built on an artificial neural network, a model trained with machine learning. Machine learning refers to methods by which a computer learns from data rather than being explicitly programmed for a task. An artificial neural network is an information processing system made of nodes and weighted connections, loosely inspired by the human brain. During training, the network strengthens or weakens the connections between its artificial neurons, which lets it learn associations between concepts and, in turn, improve its translations.
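To make “strengthening connections” concrete, here is a minimal sketch of a single artificial neuron learning the logical AND function. It assumes the classic perceptron learning rule, which is far simpler than the backpropagation-based training used for systems like GNMT; the function names and the AND task are illustrative choices, not part of Google’s system.

```python
# Toy illustration: one artificial neuron whose connection weights are
# strengthened or weakened as it sees labelled examples (perceptron rule).
# This is NOT how GNMT is trained; real systems use backpropagation.


def predict(weights, bias, inputs):
    """Fire (return 1) if the weighted sum of the inputs exceeds zero."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train(examples, epochs=10, learning_rate=1.0):
    """Nudge each weight whenever the neuron's output disagrees with the target."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in examples:
            error = target - predict(weights, bias, inputs)
            # error = +1: strengthen connections from active inputs.
            # error = -1: weaken them. error = 0: leave them alone.
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


AND_EXAMPLES = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
weights, bias = train(AND_EXAMPLES)
print([predict(weights, bias, x) for x, _ in AND_EXAMPLES])  # [0, 0, 0, 1]
```

The neuron is never told the rule for AND; it ends up with weights that reproduce it only because each mistake adjusted the relevant connections.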

Last year, Google reported that incorporating neural machine translation into Google Translate had greatly improved translation accuracy and efficiency. Neural machine translation allowed Google Translate to process whole sentences at once instead of “chunking” them and translating each chunk separately. According to Google, translating whole sentences produces output that reads more naturally, with better grammar and syntax.

“It has improved more in one single leap than in 10 years combined,” said Barak Turovsky, Google Translate project leader.

Using Google Neural Machine Translation in the Pixel Buds is a natural extension of the technology that improved Google Translate. And if the 40 languages the Pixel Buds can currently translate seem impressive, Google Translate itself supports around 103 languages. Scaling the improved translation network up to all 103 of them, however, was quite a challenge.

Google solved this problem with “Zero-Shot Translation,” which lets a single neural machine translation system translate between multiple languages without any change to the base system.

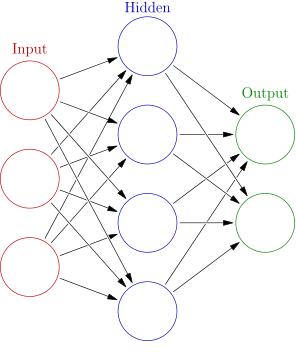

Illustration of a neural network. “Colored neural network” by Glosser.ca via Wikimedia Commons is licensed under CC BY-SA 3.0.

Zero-Shot Translation

Neural networks are made up of layers. The first layer is the input layer, where the data and its patterns are presented to the network. Next come any number of hidden layers, where the data is processed through weighted connections; during training these weights are adjusted, much as connections between neurons are reinforced. Finally, the output layer produces the network’s result. In natural language processing, a “token” is a unit of text, such as a word or part of a word, that the network treats as a meaningful unit for processing.
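The layer structure and the idea of tokens can be sketched in a few lines. This toy assumes a made-up five-word vocabulary, simple whitespace tokenization (GNMT actually splits text into subword “wordpieces”), and hand-picked weights rather than learned ones; it only shows how data flows from the input layer through a hidden layer to the output layer.

```python
# Toy forward pass: tokens -> input layer -> hidden layer -> output layer.
# The vocabulary, weights, and "cat vs. dog" score are hypothetical,
# chosen by hand purely for illustration.

VOCAB = ["the", "cat", "dog", "sleeps", "."]


def tokenize(sentence):
    """Split a sentence into lowercase word tokens, keeping '.' as its own token."""
    return sentence.lower().replace(".", " .").split()


def encode(tokens):
    """Input layer: count how often each vocabulary token appears."""
    return [float(tokens.count(word)) for word in VOCAB]


def dense_layer(inputs, weights, biases, activation):
    """Each node outputs activation(weighted sum of all inputs + its bias)."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


def relu(z):
    return max(0.0, z)


# Hidden layer: node 0 responds to "cat", node 1 responds to "dog".
HIDDEN_WEIGHTS = [[0.0, 1.0, 0.0, 0.0, 0.0],
                  [0.0, 0.0, 1.0, 0.0, 0.0]]
HIDDEN_BIASES = [0.0, 0.0]
# Output layer: a positive score means "more about cats than dogs".
OUTPUT_WEIGHTS = [[1.0, -1.0]]
OUTPUT_BIASES = [0.0]

tokens = tokenize("The cat sleeps.")
x = encode(tokens)
hidden = dense_layer(x, HIDDEN_WEIGHTS, HIDDEN_BIASES, relu)
output = dense_layer(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES, lambda z: z)
print(tokens)  # ['the', 'cat', 'sleeps', '.']
print(output)  # [1.0]
```

In a real network the weights are learned during training rather than set by hand, and there are many hidden layers with far more nodes.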

Google’s research blog explains that the system can transfer what it learns about translating one pair of languages to other pairs. Zero-Shot Translation works by adding an artificial token at the start of the input sentence that specifies the language to translate into. In an example from the blog, a single Google Neural Machine Translation system is trained to translate between Japanese and English and between Korean and English. The system’s parameters are shared across all of these language pairs, so what it learns about one language can be extended to the others. As a result, the system can translate between language pairs it has never actually encountered before.
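The target-language token is easy to illustrate. Google’s multilingual translation paper uses tokens of the form `<2es>` (“translate to Spanish”); the sketch below assumes that convention, and the helper names and the training-pair set are hypothetical. It shows that a Korean-to-Japanese request uses exactly the same input format as a trained pair, even though no Korean-Japanese examples were seen. The model itself is not told the source language; the sketch uses it only to check whether a pair appeared in training.

```python
# Sketch of zero-shot input formatting, assuming <2xx> target-language tokens.
# The function names and TRAINED_PAIRS below are hypothetical.

# (source, target) directions present in the training data: only
# Japanese<->English and Korean<->English, as in the blog's example.
TRAINED_PAIRS = {("ja", "en"), ("en", "ja"), ("ko", "en"), ("en", "ko")}


def add_target_token(sentence, target_lang):
    """Prepend an artificial token telling the shared model which language to produce."""
    return f"<2{target_lang}> {sentence}"


def build_request(sentence, source_lang, target_lang):
    """Format input for the single shared model and flag whether the pair is zero-shot."""
    return {
        # Only the target token is added; the source language is not marked.
        "input": add_target_token(sentence, target_lang),
        "zero_shot": (source_lang, target_lang) not in TRAINED_PAIRS,
    }


print(build_request("Hello", "en", "ja"))
# {'input': '<2ja> Hello', 'zero_shot': False}

request = build_request("안녕하세요", "ko", "ja")  # Korean for "hello"
print(request["zero_shot"])  # True
```

Because every pair shares one model and one input format, requesting an unseen direction needs no architectural change, only a different token.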

“This inspired us to ask the following question: Can we translate between a language pair which the system has never seen before?” the translation team said.

In the blog’s example, the system was never shown any Korean-to-Japanese or Japanese-to-Korean translations. Even so, it generalized from the translation knowledge it had gained from the other pairs and produced reasonable translations between Korean and Japanese.

According to Google, to the best of its knowledge this was the first time this type of transfer learning had worked in machine translation. The result also raised the question of how the system was able to generalize so effectively. The researchers hypothesized that the network was learning a common representation of concepts, in which sentences with the same meaning are represented similarly regardless of the language they are expressed in. They tested this hypothesis by examining 3D visualizations of the network’s internal representations.
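One simple way to check whether sentences with the same meaning are represented similarly is to compare their vectors with cosine similarity. The three-dimensional vectors below are invented for illustration; they are not taken from GNMT, whose internal representations have far more than three dimensions.

```python
import math

# Invented 3D "sentence vectors" for illustration only (not real GNMT data).
SENTENCE_VECTORS = {
    "en:hello": [0.9, 0.1, 0.2],
    "ko:hello": [0.8, 0.2, 0.25],
    "en:goodbye": [0.1, 0.9, 0.3],
}


def cosine_similarity(u, v):
    """1.0 means the vectors point the same way; near 0 means unrelated (orthogonal)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def nearest(query_key):
    """Return the other sentence whose vector is most similar to the query's."""
    query = SENTENCE_VECTORS[query_key]
    others = [k for k in SENTENCE_VECTORS if k != query_key]
    return max(others, key=lambda k: cosine_similarity(query, SENTENCE_VECTORS[k]))


print(nearest("ko:hello"))  # en:hello
```

If a shared “interlingua” exists, the nearest neighbor of a sentence should be its translation in another language rather than a different sentence in the same language, which is what these toy vectors are constructed to show.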

The representations the network produced while translating between all possible pairs of English, Korean, and Japanese suggested that it was indeed capturing something about the meaning of each sentence, a manifestation of an “interlingua.”

Google is certainly not the only company using machine learning to translate speech and text. Microsoft’s Skype can translate between eight languages during real-time video or voice calls, and between 50 languages in text chat.

As artificial intelligence advances, so too will the ease and speed with which people communicate with each other. With each advancement, the language barrier appears to grow ever smaller.