As research into artificial intelligence accelerates and the technology spreads into more and more products, the ethics of AI use is becoming increasingly relevant. To keep a firm grasp on the societal and ethical impacts of artificial intelligence, the UK-based artificial intelligence company DeepMind has created a special research unit devoted to investigating the ethical issues surrounding AI use.

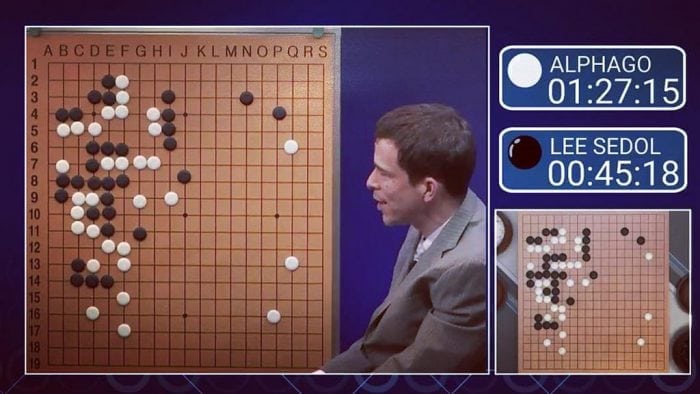

DeepMind was acquired by Google in 2014, and the company specializes in applying artificial intelligence and machine learning techniques to problems around the world, including issues in the energy and health sectors. Though the company is best known for AlphaGo, the first program to defeat a top professional human player at the game of Go, DeepMind is pursuing a wide variety of applications for AI.

“As scientists developing AI technologies, we have a responsibility to conduct and support open research and investigation into the wider implications of our work,” reads a blog post written by DeepMind’s Verity Harding and Sean Legassick, co-leads of the Ethics and Society unit.

The post continues:

At DeepMind, we start from the premise that all AI applications should remain under meaningful human control, and be used for socially beneficial purposes. Understanding what this means in practice requires rigorous scientific inquiry into the most sensitive challenges we face.

Match 3 of Lee Sedol vs AlphaGo. Photo: Buster Benson via Flickr. Image is licensed under CC BY-SA 2.0

The Founding of DeepMind’s Ethics and Society Unit

The post explains that the job of the Ethics and Society unit is to ensure that artificial intelligence is used in a way that is truly responsible and beneficial for society.

DeepMind’s new Ethics and Society unit already consists of six staff members with backgrounds in areas like ethics, law, and philosophy. DeepMind wants to expand the team to 25 people next year.

The co-founder of DeepMind, Mustafa Suleyman, stressed how important it is to create technology with its ethical implications in mind. Suleyman says the company is attempting to surround its engineers and researchers with insightful perspectives on the consequences of the technology they are developing.

Apart from the current staff and the eventual 25 employees, the Ethics and Society unit will be advised by a panel of six “fellows”. The fellows are independent advisors and industry experts who will provide the unit with critical guidance and feedback. The list of fellows includes Nick Bostrom, Oxford University professor and author of Superintelligence; Jeffrey Sachs, director of Columbia University’s Earth Institute; and economist Diane Coyle.

The ethics unit will be tasked with considering the implications of artificial intelligence and machine learning algorithms for everyday situations. For instance, artificial intelligence trained on biased data can exacerbate existing societal problems. A recent investigation by ProPublica found that a model used to estimate recidivism risk wrongly labeled African-American defendants in Broward County, Florida as likely to re-offend at nearly twice the rate of white defendants, meaning those defendants were flagged as high risk but did not go on to re-offend.
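One common way to quantify this kind of disparity is to compare false positive rates across groups: among people who did not re-offend, what share was still flagged as high risk? The sketch below is only an illustration of that measurement. The function name, the group labels "A" and "B", and the records are all made up for the example; they are not ProPublica's data or method.

```python
from collections import defaultdict


def false_positive_rates(records):
    """For each group, return the share of non-reoffenders flagged as high risk."""
    flagged = defaultdict(int)  # non-reoffenders who were flagged high risk
    total = defaultdict(int)    # all non-reoffenders
    for group, predicted_high_risk, reoffended in records:
        if not reoffended:
            total[group] += 1
            if predicted_high_risk:
                flagged[group] += 1
    return {group: flagged[group] / total[group] for group in total}


# Synthetic toy records (not real data): (group, predicted_high_risk, reoffended)
records = [
    ("A", True, False), ("A", True, False), ("A", False, False),
    ("A", False, False), ("A", True, True),
    ("B", True, False), ("B", False, False), ("B", False, False),
    ("B", False, False), ("B", True, True),
]

rates = false_positive_rates(records)
print(rates)                      # false positive rate per group
print(rates["A"] / rates["B"])    # how many times higher group A's rate is
```

In this toy data, group A's false positive rate is twice group B's, even though both groups contain the same number of people who actually re-offended.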

Even when algorithms aren’t trained directly on race or other attributes that could be used to discriminate against someone, algorithms that use variables correlated with race or sex (such as the quality of houses or schools in an area) can end up discriminating accidentally. These variables end up acting as proxies for things like race, sex, or age. Part of designing ethical algorithms and systems is making sure they do not unintentionally discriminate against people, which involves identifying and removing variables that can act as proxies for sensitive categories.
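As a rough illustration of how a proxy variable might be spotted, the sketch below flags any feature whose correlation with a sensitive attribute exceeds a cutoff. Everything here is an assumption made for the example: the feature names, the 0.8 threshold, and the toy values are hypothetical, and a simple correlation screen is only a crude first pass rather than a complete fairness audit.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length numeric lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


def flag_proxies(features, sensitive, threshold=0.8):
    """Return names of features strongly correlated with the sensitive attribute."""
    return [
        name
        for name, values in features.items()
        if abs(pearson(values, sensitive)) >= threshold
    ]


# Synthetic toy data (not real): 1 / 0 marks membership in a protected group.
sensitive = [1, 1, 1, 0, 0, 0]
features = {
    "neighborhood_school_score": [2, 3, 2, 8, 9, 8],  # tracks group membership
    "years_at_current_job": [1, 5, 3, 2, 4, 3],       # unrelated to group
}

print(flag_proxies(features, sensitive))
```

Here the hypothetical school score is flagged because it separates the two groups almost perfectly, while the job tenure feature is not. In practice a flagged feature would need human review, since several weakly correlated variables can still act as a proxy in combination.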

Nick Bostrom has lectured on the dangers of misaligned AI. Photo: Future of Humanity Institute via Wikimedia Commons, CC BY-SA 4.0

The Unit’s Guiding Principles

To achieve their goal of dealing with problems like biased algorithms, the DeepMind Ethics and Society team have delineated five principles that they will follow.

The five governing principles of the Ethics and Society unit:

1) Artificial intelligence should have social benefits. AI should be created in ways that will serve the good of both the environment and societies around the globe.

2) Techniques and research should be rigorous and evidence-based. Their research on the impacts of AI will be open to peer review and critical feedback.

3) Research will be transparent and open. The Ethics and Society unit aims to be transparent about who they are working with and what projects they are working on. Their research grants will be unrestricted, and no attempt will be made to influence the outcomes of studies they commission. Collaborations with external researchers will involve disclosing any funding those researchers have received from the unit.

4) The group will aim to be interdisciplinary and diverse. The unit wants to include a wide range of voices in their research, using the strength of different disciplines and unique viewpoints.

5) The unit wants to proceed in a spirit of collaboration and inclusivity. They want to support a wide range of academic and public dialogue about artificial intelligence, allowing outside voices to contribute, to ensure that AI benefits all of society.

Scrutiny and Accountability

The creation of the Ethics and Society unit comes after a ruling earlier this year that London’s Royal Free Hospital breached UK data privacy law when it handed over data on more than 1.6 million patients to DeepMind. The ruling has put the company under a certain amount of scrutiny. Suleyman showed that he was aware of the criticism and said that he shares the same concerns about ethics that others have.

Says Suleyman:

“I might sit here with hindsight, as I did with the independent reviewers on DeepMind Health, in a year and be like, ‘Yup, this isn’t right, that’s not right’. And I’m sure we probably will be [under scrutiny] because this is surprising to people and they are going to ask odd questions like, ‘Isn’t this just in-housing’ and all the other normal concerns that people are going to raise. I think we have to be a little bit resilient to that default skepticism. I respect it, I know the usual characters will have the same old concerns, which I also have. My heart is in their place.”

To help ensure accountability, a panel of reviewers will examine the unit’s conclusions. The panel includes Richard Horton, editor of The Lancet, and Mike Bracken, former digital officer for the UK government.

DeepMind is certainly not the first company to express worries about the misuse of AI or to take measures to make sure that it is employed ethically. Industry leaders like Elon Musk and Mark Zuckerberg have sounded off on the potential dangers of AI being misused. Furthermore, research by Kate Crawford and Ryan Calo warned that “there are no agreed methods to assess the sustained effects of such applications [autonomous systems] on human populations.” The DeepMind blog post specifically acknowledged and referenced Crawford and Calo’s work.

As research into artificial intelligence moves on, the role of groups like the Ethics and Society unit will become ever more important. In the meantime, the team has affirmed their commitment to pursuing the ethical implementation of AI.

“If AI technologies are to serve society, they must be shaped by society’s priorities and concerns,” say Harding and Legassick.