When a person wishes to perform a certain action, this desire is characterized as an intention. Actions are the physical means by which intentions are realized, and observers infer a person’s intentions from their behavior. For example, when Jack intends to hold a bottle (intention), he moves his hand toward the bottle and grasps it (action). Jill, watching the movement of Jack’s arm and hand, can tell that Jack will grasp the bottle (inferred intention).

For people with illnesses or injuries that limit movement, such as quadriplegia and spinal cord injury (SCI), however, actions are not guaranteed to reflect the underlying intentions. Even activities of daily living, such as holding a bottle, may be impossible because of physical impairment. Although a number of wearable robots have been introduced to assist these users, it remains challenging for a robot to correctly identify when the user wants to grasp or release a particular object in their environment, which it must do before it can help the user manipulate objects freely.

To address this issue, previous research has proposed methods based on bio-signal sensors and on mechanical sensors to detect users’ intentions. Bio-signal sensors, such as electroencephalography (EEG) and electromyography (EMG), are often used to detect the electrical signals that are generated by the brain and transmitted to the muscles. Bio-signal activations greater than a certain threshold, which is usually specified by machine learning classifiers, are commonly regarded as user intentions. Among mechanical sensors, pressure sensors, bending sensors, and button switches are the most widely used. Pressure sensors report contact between the wearable hand robot and the object it interacts with, and this contact is interpreted as a grasping intention. Bending sensors measure wrist or finger joint angles, while binary buttons simply convey the user’s intention to the robot.
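The threshold idea described above can be sketched in a few lines of Python. This is only an illustration, not the method used in any of the cited work: the function names, the moving-average envelope, and the fixed hand-picked threshold are all assumptions (in practice, as noted, the threshold is usually specified by a machine learning classifier).

```python
from statistics import mean


def rectify_and_smooth(samples, window=5):
    """Full-wave rectify the signal, then apply a trailing moving average.

    The result is a rough amplitude envelope of the raw signal.
    """
    rectified = [abs(s) for s in samples]
    envelope = []
    for i in range(len(rectified)):
        start = max(0, i - window + 1)
        envelope.append(mean(rectified[start:i + 1]))
    return envelope


def detect_intention(samples, threshold, window=5):
    """Return the index of the first sample whose envelope exceeds
    `threshold`, or None if the threshold is never crossed.

    `threshold` is a hypothetical, hand-picked value (an assumption).
    """
    for i, value in enumerate(rectify_and_smooth(samples, window)):
        if value > threshold:
            return i
    return None


# Made-up samples for illustration only: a quiet baseline followed by a burst.
signal = [0.01, -0.02, 0.01, 0.0, 0.5, -0.6, 0.7, -0.5]
onset_index = detect_intention(signal, threshold=0.2, window=3)
```

Because the envelope is averaged over a window, the detected onset lags the first large sample slightly; a shorter window reacts faster but is more sensitive to noise.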

However, these existing methods have limitations that make it difficult to identify user intentions properly. Bio-signal sensors depend on the individual user; for example, they require person-to-person calibration to be used accurately. Pressure sensors are not applicable to people with fully impaired hand function because of “release issues” when using them. Other mechanical sensors usually require additional body movements for user intentions to be interpreted clearly, which sometimes forces users to perform unnecessary and unnatural actions.

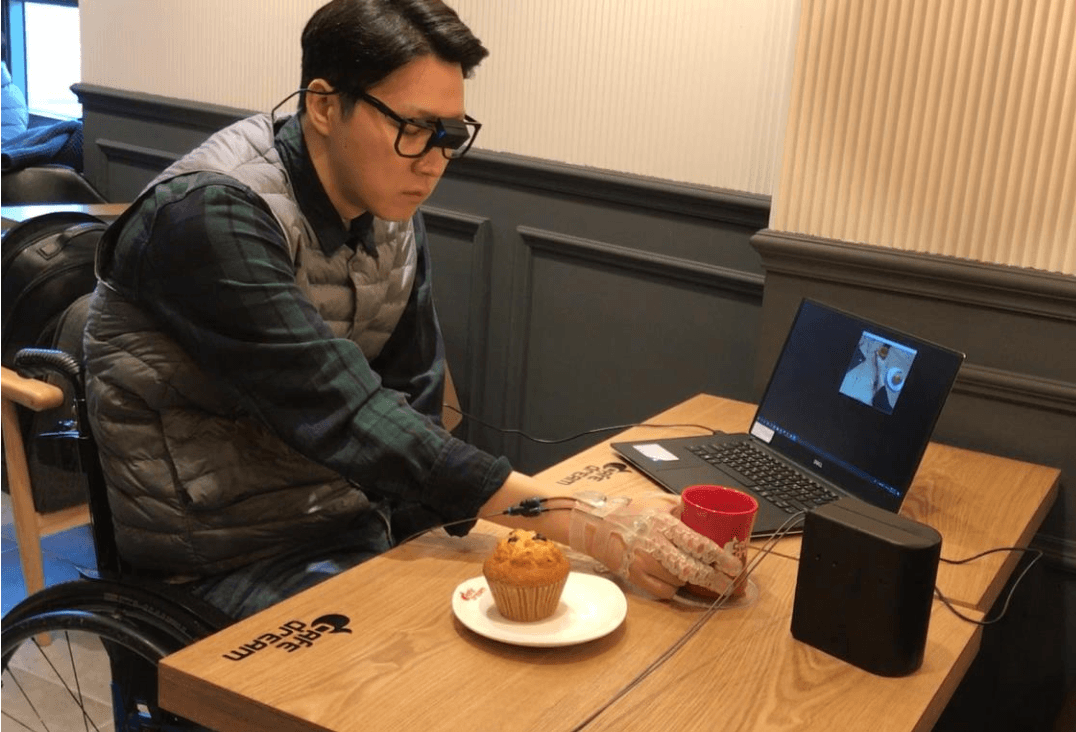

Our study presents a new paradigm for perceiving user intentions for wearable hand robots. We hypothesized that user intentions can be obtained from spatial and temporal information collected with a first-person-view (egocentric) camera. Spatial information captures the hand-object relationship in the current scene, while temporal information, in the form of the history of the user’s arm behavior, can be analyzed to infer user intentions. To implement this hypothesis, we proposed a deep learning model, Vision-based Intention Detection network from an EgOcentric view (VIDEO-Net). Exo-Glove Poly II, a tendon-driven wearable robot hand, was used to validate the proposed method.

To verify that the intentions detected by our system agree with the user’s true grasping and releasing intentions, we compared the intentions obtained with the proposed paradigm against those retrieved from measured EMG signals.

The intentions detected by our system preceded the user intentions measured from EMG signals by at most 0.3 seconds for grasping and 0.8 seconds for releasing.
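As a rough illustration of how such a lead time could be computed, the sketch below subtracts the time of the vision-based detection from the time of the EMG-based detection in each trial. The function names and the timestamps are hypothetical placeholders chosen for the example; they are not data or code from the study.

```python
def lead_time(vision_onset_s, emg_onset_s):
    """Seconds by which the vision-based detection precedes the EMG onset.

    A positive value means the vision-based detection came first.
    """
    return emg_onset_s - vision_onset_s


def max_lead(trials):
    """Largest lead time over (vision_onset_s, emg_onset_s) pairs."""
    return max(lead_time(vision, emg) for vision, emg in trials)


# Placeholder timestamps in seconds, invented purely for illustration.
example_trials = [(1.0, 1.2), (2.5, 2.6)]
largest_lead = max_lead(example_trials)
```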

These results verify that our model successfully detects the user’s intention to grasp or release objects, as seen in the measured EMG signals.

The participants in our experiment were subjects with no physical disabilities and an SCI patient. All participants were required to perform pick-and-place tasks. The performance of the subjects with no physical disabilities was analyzed using the average grasping, lifting, and release times for different objects while using our model. The SCI patient was instructed to grasp and release target objects only by reaching towards them, without performing any additional actions.

Because our approach predicts user intentions from arm behaviors and hand-object interactions captured as visual information, it requires neither person-to-person calibration nor additional actions. In this manner, the robot is able to interact with humans and augment human ability seamlessly.

These findings are described in the article entitled “Eyes are faster than hands: A soft wearable robot learns user intention from the egocentric view,” recently published in the journal Science Robotics.