One of the least desirable outcomes of the digital age is a pandemic of misinformation and disinformation in digital media, especially social media. The popularized term “fake news” conflates many forms of both. Preventing or even managing the “fake news” problem requires an understanding of how and why deceptive content propagates in media, the effects it produces, and its vulnerabilities.

The superficial problem of how deceptive messaging propagates in media has been well studied using a range of epidemiological models, which show diffusion behavior very similar to that of biological pathogens in victim populations.

The deeper problem of why this behavior arises has been less well studied.

We were especially interested in two questions. Both were prompted by the mayhem that “fake news” has produced in politics and by the “viral” propagation observed in social and other digital media, clearly fuelled by the advertising-driven “Internet economy.”

The first question was how much deceptive effort is required to disrupt consensus in a group, given deceptions that introduce uncertainty.

The second question was how sensitive “fake news” is to the costs incurred by deceivers introducing it into a population.

We employed an evolutionary simulation to model the population, in which all of the individual agents in the population were playing the well-known iterated prisoner’s dilemma game, widely employed for modeling social systems. In such simulations, the agents in the population play various strategies, such as tit-for-tat, always-defect, or always-cooperate, and payoffs from these repeated games determine fitness, and thus which strategies in the population thrive, and which die out. This is a good model for capturing the behaviors of smaller populations of “influencers” in social media, who typically seek to form a consensus on some issue being debated. Social media followers in turn typically align to the consensus.
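As a rough illustration of the mechanics described above (not the code used in the study), the following Python sketch plays the three named strategies against each other and then applies one round of fitness-proportional reproduction. The payoff values (5, 3, 1, 0), the ten-round match length, and the function names are all assumptions chosen for the example; the article does not state the study's actual parameters or selection rule.

```python
# Payoff to "me" for (my_move, their_move); "C" = cooperate, "D" = defect.
# Textbook prisoner's dilemma values, assumed here for illustration only.
PAYOFF = {("C", "C"): 3, ("C", "D"): 0, ("D", "C"): 5, ("D", "D"): 1}


def tit_for_tat(opponent_history):
    # Cooperate first, then copy the opponent's previous move.
    return opponent_history[-1] if opponent_history else "C"


def always_defect(opponent_history):
    return "D"


def always_cooperate(opponent_history):
    return "C"


def play_match(strategy_a, strategy_b, rounds=10):
    """Play an iterated game and return the total scores (a, b)."""
    seen_by_a, seen_by_b = [], []  # what each player has seen the other do
    score_a = score_b = 0
    for _ in range(rounds):
        move_a = strategy_a(seen_by_a)
        move_b = strategy_b(seen_by_b)
        score_a += PAYOFF[(move_a, move_b)]
        score_b += PAYOFF[(move_b, move_a)]
        seen_by_a.append(move_b)
        seen_by_b.append(move_a)
    return score_a, score_b


def average_payoffs(strategies, shares, rounds=10):
    """Expected match score of each strategy against the current mix."""
    return {
        name_a: sum(
            shares[name_b] * play_match(strat_a, strat_b, rounds)[0]
            for name_b, strat_b in strategies.items()
        )
        for name_a, strat_a in strategies.items()
    }


def reproduce(shares, fitness):
    """Fitness-proportional update: fitter strategies gain population share."""
    total = sum(shares[name] * fitness[name] for name in shares)
    return {name: shares[name] * fitness[name] / total for name in shares}


strategies = {
    "tit_for_tat": tit_for_tat,
    "always_defect": always_defect,
    "always_cooperate": always_cooperate,
}
shares = {name: 1 / 3 for name in strategies}
next_shares = reproduce(shares, average_payoffs(strategies, shares))
```

Starting from an even three-way split, a single generation under these assumed payoffs shifts share toward always-defect and away from always-cooperate, which is the kind of selection pressure the simulation relies on.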

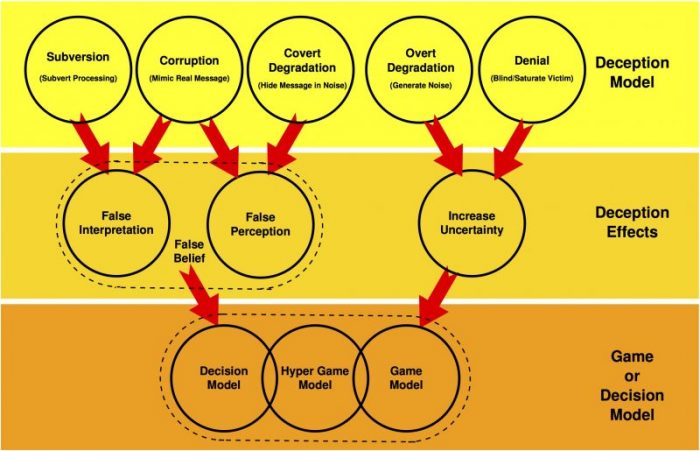

This diagram shows the mapping of the four information-theoretic deception models into deception effects for use in game and decision models. This is an important advance, as game and decision theorists can now incorporate deceptions using a mathematically robust method to calculate deception effects. Image published with permission from Carlo Kopp.

Modeling deception presented a bigger challenge. The problem of how to incorporate deceptions into game theory models is widely regarded as unsolved, although good but incomplete solutions have existed since the 1980s. The proposed solutions involve using the effect of a deception, either a false belief or uncertainty, as an input into the game model.

How do we map a specific type of deception commonly seen in social media into an effect that we can use in a game?

That problem was solved almost two decades ago by information warfare theorists, two of whom independently determined that three of the four common deceptions can be modeled using Shannon’s information theory and, more specifically, the ideas of channel capacity and information-theoretic similarity.
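To give a feel for what channel capacity means here, consider a deliberately simplified case. Suppose a victim's observation of an opponent's move is treated as a binary symmetric channel, in which each observed move is flipped with some probability. That framing, and the function names below, are assumptions made for this sketch rather than the formulation used in the study. Shannon's capacity for such a channel is one bit minus the binary entropy of the flip probability.

```python
import math


def binary_entropy(p):
    """Entropy in bits of a coin that lands heads with probability p."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def bsc_capacity(flip_probability):
    """Capacity in bits per observation of a binary symmetric channel."""
    return 1.0 - binary_entropy(flip_probability)


# Capacity falls as the injected noise grows (illustrative values only).
for p in (0.0, 0.1, 0.25, 0.5):
    print(f"flip probability {p:.2f}: capacity {bsc_capacity(p):.3f} bits")
```

At a flip probability of 0.5 the capacity drops to zero: what the victim perceives then carries no information about what the opponent actually did.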

This led us to the solution to the problem of incorporating deceptions into games: take a deception type, map it into one of the four information-theoretic deception models, determine the effect of the deception, and use that effect as an input to a game model.

In our simulation, we made some of the game playing agents capable of deception, so they could manipulate opposing agents and produce the effects of deceptions in their opponents.

We modeled two types of deception. The first was Degradation, which introduces uncertainty into the victim’s perception and models the effect of incoherent or random misinformation. The second was Corruption, which introduces false beliefs into the victim’s perception and models the effect of coherent disinformation, such as political propaganda.
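One simple way to picture these two effects is as filters sitting between what an opponent actually played and what the victim perceives. The sketch below is an assumption-laden illustration, not the study's implementation: the function names, the probability parameters `noise` and `success`, and the choice to model perception as a single move label are all hypothetical.

```python
import random


def degrade(true_move, noise, rng):
    """Degradation: with probability `noise` the victim cannot tell what
    was played and perceives an uninformative coin flip instead."""
    if rng.random() < noise:
        return rng.choice(["C", "D"])
    return true_move


def corrupt(true_move, success, rng, false_move="C"):
    """Corruption: with probability `success` the victim accepts the
    deceiver's false claim (`false_move`) as what was played."""
    if rng.random() < success:
        return false_move
    return true_move


rng = random.Random(0)  # fixed seed so the example is repeatable

# A defector hides behind noise: the victim is unsure what happened.
perceived_noisy = [degrade("D", noise=0.5, rng=rng) for _ in range(10)]

# A defector who always succeeds in claiming to have cooperated.
perceived_false = [corrupt("D", success=1.0, rng=rng) for _ in range(10)]
```

A strategy such as tit-for-tat would then respond to the perceived move rather than the true one, which is how the effect of the deception enters the game.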

To measure the sensitivity of deception effort to costs, we subtracted a cost from a deceiving agent’s payoff in every game it played. This reflects the real world, where deception requires investing additional effort that is wasted when the deception fails.
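The arithmetic behind this can be sketched in a few lines. The numbers and the function name below are hypothetical, chosen only to show why a cost that is paid whether or not the deception works can erase the deceiver's advantage.

```python
def expected_deceiver_payoff(success_probability, payoff_if_fooled,
                             payoff_if_not, cost):
    """Expected per-game payoff for a deceiver.

    The cost is subtracted regardless of outcome, so effort spent on a
    failed deception is simply lost.
    """
    gross = (success_probability * payoff_if_fooled
             + (1 - success_probability) * payoff_if_not)
    return gross - cost


# Illustrative values: 5 if the victim is fooled, 1 if not, fooled half
# the time. Compare against an assumed payoff of 3 for honest cooperation.
free = expected_deceiver_payoff(0.5, 5, 1, cost=0)    # 3.0
costly = expected_deceiver_payoff(0.5, 5, 1, cost=2)  # 1.0
```

With these assumed values, cost-free deception matches the honest payoff, while a cost of 2 leaves the deceiver well below it.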

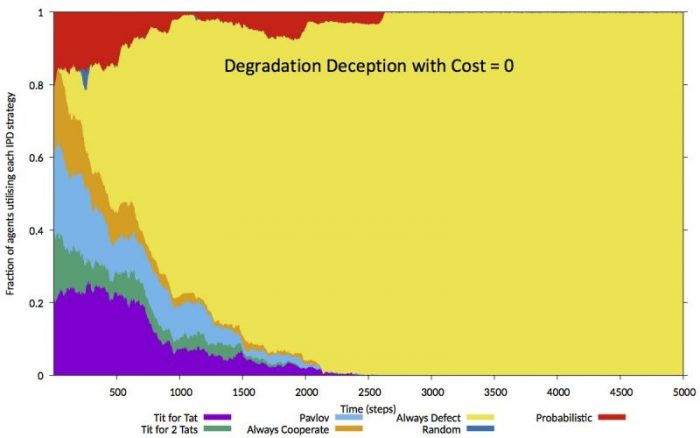

This plot shows the evolution of the agent population over time, for the special case of cost-free deceptions. The Degradation deception introduces uncertainty into the victim’s perceptions. The population is invaded by agents that play the selfish Always Defect strategy, of which a fraction are also actively employing the Degradation deception, and these agents displace all other agents by 2,500 time steps in the simulation. Where deception incurs no costs, everybody has to exploit deception to survive. Image published with permission from Carlo Kopp.

Analysis of results from several thousand simulation runs showed some unexpected results.

Even very small numbers of deceiving agents, around 1 percent of the population, significantly disrupted cooperative behaviors in the population. Both deception types also proved to be much more sensitive to the cost of deception than expected. We also tested the diffusion behavior of the deception in the population, and the results proved remarkably close to those seen with epidemiological models and real social media datasets.
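For readers unfamiliar with epidemiological diffusion curves, the sketch below shows the simplest member of that family, a discrete-time susceptible-infected (SI) model. It is included only to show the characteristic S-shaped spread; the article does not say which epidemiological models were used for comparison, and the function name, population size, and contact rate are assumptions.

```python
def si_diffusion(population, initially_exposed, contact_rate, steps):
    """Discrete-time SI model: returns the number 'infected' at each step."""
    infected = float(initially_exposed)
    history = [infected]
    for _ in range(steps):
        susceptible = population - infected
        # New cases need contact between an infected and a susceptible agent.
        new_cases = contact_rate * infected * susceptible / population
        infected = min(population, infected + new_cases)
        history.append(infected)
    return history


# Seed 1 percent of a population of 1,000 (illustrative values only).
curve = si_diffusion(population=1000, initially_exposed=10,
                     contact_rate=0.5, steps=50)
```

The resulting curve grows slowly at first, accelerates as more agents carry the content, and then saturates as few unexposed agents remain.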

What are the implications of these findings for dealing with the real-world problem of “fake news”?

The first conclusion is that deceptive content, whether distributed in social or mass media, can produce disproportionately disruptive effects in communities attempting to cooperate to form a consensus. Exactly how much is, of course, an empirical problem specific to the community in question, the topic of debate, and the nature of the deceptive content. There are many examples of “viral” reports devoid of substance causing major upheavals in political debates.

The second conclusion is that costs incurred in creating and propagating deceptive content critically determine whether the deception can establish itself in a population, let alone thrive and overwhelm the population. The special case of zero cost for both deception types saw cooperative behavior in the population vanish entirely. Conversely, where the cost of deception was high enough, the population behaved much like the special case of a deception-free population.

The latter has important implications for the real world of social and mass media, as the current “Internet economy” model sees no costs attached to the propagation of deceptive content, and, often, an actual profit from advertising revenues on websites hosting deceptive content. Digital technology has also dramatically reduced the cost of producing deceptive content, exacerbating the propagation problem.

In conclusion, an evolutionary iterated prisoner’s dilemma simulation that incorporated information-theoretic deception models was able to capture many key behaviors observed in real-world populations exposed to “fake news,” including demonstrating strong disruptive effects to cooperative behaviors, high sensitivity to the cost of deception, and similar diffusion behaviors.

These findings are described in the article entitled Information-theoretic models of deception: modeling cooperation and diffusion in populations exposed to “fake news”, recently published in the journal PLOS ONE. This work was conducted by Carlo Kopp and Kevin B. Korb from Monash University and Bruce I. Mills from the University of Western Australia.