The 21st century has brought a new landmark to the observational radio astronomy. Astronomers are now able to detect radio signals from a plethora of cosmic sources at various cosmological scales. Multiple detections show that periodic radio pulsations come mostly from numerous rapidly-rotating neutron stars known as radio pulsars located in the Milky Way, while much brighter single pulses are attributed to a few little-studied and far more distant fast radio bursts.

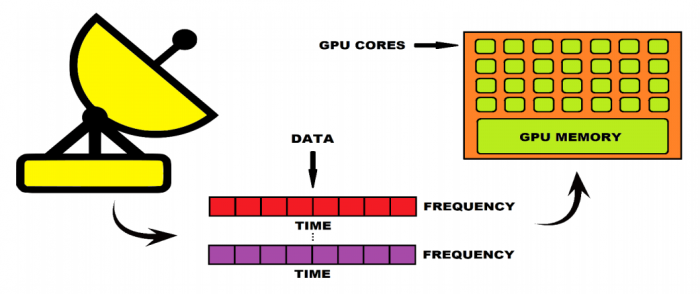

To grasp the origin and properties of these pulses, astronomers need telescopes with tremendous time and frequency resolution. To achieve those, modern radio surveys now operate in real time on large supercomputers with graphic processing units (GPUs) and therefore utilize enormous data rates and computational power. Among them is the new real-time Apertif survey that combines 12 equidistant dishes of the Westerbork Synthesis Radio Telescope in the Netherlands (Fig. 1). All 12 dishes are equipped with the high-resolution cameras that together provide a very large field of view equal to 40 times the size of the Moon. The produced amount of data requires a Top500 candidate ARTS supercomputer with a receiving data speed larger than the total Dutch internet traffic.

As every radio survey, Apertif is limited in its search for new radio flashes. Observed area of the sky, telescope sensitivity, spectral resolution, noise threshold – all these factors determine to what extent the survey will be successful in detecting new pulses. Within such limitations, one wants an optimal distribution of computational resources on graphical processors so that all telescopes perform real-time observations at maximum search capability (Fig. 2). A traditional way to find such optimal configuration of computational resources is to perform an auto-tuning each time the telescopes get upgraded. A complete scan of all possible configurations is known as a brute-force search. Data processing involves several subsequent operations that depend on each other. Probing every single possible configuration for all operations together with brute-force would take more than 100 billion years! Therefore, brute-force can only optimize configurations per single operation, but even that may take from 10 hours to 1 day.

Figure 2. The data from the radio telescope comes in a three-dimensional format: time (X-axis), frequency (Y-axis), estimated distance to the source (color). These data then need to be optimally distributed (tuned) across GPU computational resources (cores and memory) to assure real-time processing. Typical radio observations involve more than 1015 possible configurations, impossible to tune with brute-force. Figure courtesy Klim Mikhailov.

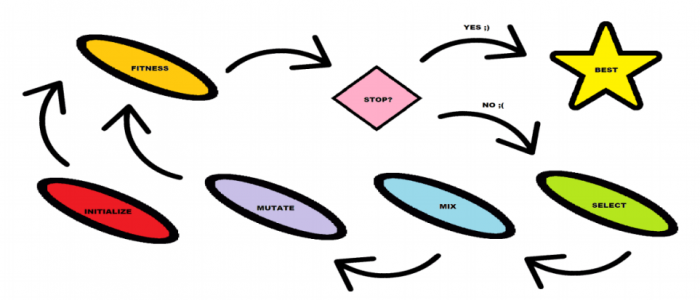

An alternative heuristic approach proposed in our paper (arXiv pre-print here) allows to only explore and improve already efficient configurations, thereby significantly (at least 5 times) reducing search time and allowing quick telescope upgrades. Additionally, it optimizes all operations at once, and thus also takes interactions between separate operations into account, prohibiting configurations that are not possible based on previously obtained ones. This new approach applies genetic algorithms, a type of optimization where good configurations get selected and mixed with each other, in an attempt to produce better configurations. The measure of “goodness” corresponds to the fitness function, in our case data processing time. In the end, only the most optimal configuration gets chosen (Fig. 3).

Figure 3. Schematic representation of a genetic algorithm. Initialized configurations are first evaluated in terms of their fitness functions. The best ones get selected and mixed with each other, some also get randomly changed (mutated) to further explore parameter space. The updated configurations then undergo fitness evaluation again. Once the stopping criteria (maximum number of algorithm iterations or desired fitness value) is reached, the best configuration gets selected and the algorithm stops. Figure courtesy Klim Mikhailov.

Genetic algorithms constantly improve initially good solutions and explore the rest of the parameter space at the same time – a weighty advantage over exhaustive and other local optimization (e.g. gradient descent) searches. They are also a perfect choice if one wants to quickly get a reasonably good solution in a multidimensional parameter space without big computations. Finally, heuristics are platform-independent and can thus always be transferred to larger surveys.

These findings are described in the article entitled The Apertif Monitor for Bursts Encountered in Real-time (AMBER) auto-tuning optimization with genetic algorithms, recently published in the journal Astronomy and Computing.