Distance measurement is a central challenge in astronomy. Many steps lie between the parallax of nearby stars and the redshift of the most distant galaxies. Amid the rush to understand dark energy, several large astronomical surveys are investigating the accelerated expansion of the universe. The Dark Energy Survey (DES) completed its observations last January, cataloging hundreds of millions of galaxies.

Obtaining precise distance measurements for so many galaxies is a hard task. To address this problem, the astronomical community has widely adopted photometric redshifts (photo-z’s). Although less accurate than spectroscopic redshifts, they are cheaper and faster to obtain (in terms of the number of galaxies measured per exposure time) and hence allow observations to go beyond the signal-to-noise limits of spectroscopic observations.

The complete procedure for estimating photo-z’s involves many steps: handling a multitude of spectroscopic datasets for calibration; matching the sources in these spectroscopic datasets to those in the photometric survey; training empirical algorithms (e.g., neural networks and random forests); validating these algorithms (e.g., through their bias and variance); and, finally, measuring photo-z’s for large samples.
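As a rough illustration of the validation step, the sketch below computes two simple summary statistics for galaxies that have both a photometric and a spectroscopic redshift: the mean residual (bias) and the standard deviation of the residuals (scatter). The function name `photoz_metrics` and these particular definitions are assumptions made for this example; they are not necessarily the metrics implemented in the Portal.

```python
from statistics import mean, pstdev


def photoz_metrics(z_phot, z_spec):
    """Return (bias, scatter) of the residuals z_phot - z_spec.

    bias    : mean residual (systematic offset of the estimates)
    scatter : population standard deviation of the residuals
    """
    if len(z_phot) != len(z_spec) or len(z_phot) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    # Residual of each matched galaxy: estimated minus reference redshift.
    residuals = [zp - zs for zp, zs in zip(z_phot, z_spec)]
    return mean(residuals), pstdev(residuals)


# Hypothetical matched sample, values chosen only for illustration.
bias, scatter = photoz_metrics([0.5, 0.7, 0.9], [0.4, 0.7, 1.0])
print(f"bias = {bias:.3f}, scatter = {scatter:.3f}")
```

In practice, residuals are often normalized by (1 + z_spec) before computing such statistics; that variant is omitted here to keep the example short.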

In this work, we show how the DES Science Portal infrastructure connects all these steps in a stable environment, ensuring consistency and provenance control. The Portal is a web-based tool that combines a web application, a workflow system, a cluster of computers, and two databases. It is developed collaboratively using Git by a large team of IT professionals and scientists spread across Brazil, with contributions from DES members at participating institutions in several other countries.

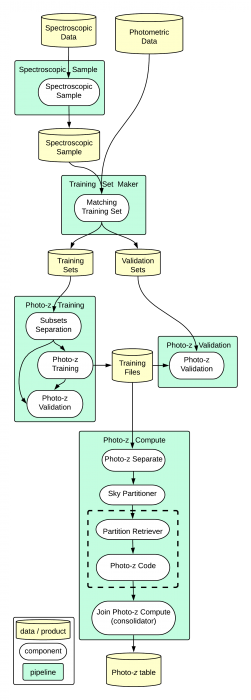

The chain of tasks mentioned above is executed in the Portal as a modular workflow in which each phase creates intermediate products that are consumed by the next, as shown in the schema (Figure 1). The data, represented by yellow cylinders, flow through pipelines (in green), which are self-consistent blocks that can be composed of one or more independent components (white boxes). For data-intensive tasks, such as computing photo-z’s for large samples, these components run in parallel on a cluster of computers (components delimited by the dashed line).

Image republished with permission from Elsevier from https://doi.org/10.1016/j.ascom.2018.08.008
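The parallel execution of a component over a large sample, described above, can be sketched as follows. This is a minimal illustration and not the Portal's workflow system: a local thread pool stands in for the cluster nodes, and the names `split_into_chunks`, `run_component`, and `run_in_parallel`, as well as the `estimator` callable, are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(items, chunk_size):
    """Split a list into consecutive chunks of at most chunk_size items."""
    if chunk_size < 1:
        raise ValueError("chunk_size must be at least 1")
    return [items[i:i + chunk_size] for i in range(0, len(items), chunk_size)]


def run_component(chunk, estimator):
    """Apply the estimator to every item in one chunk."""
    return [estimator(item) for item in chunk]


def run_in_parallel(items, estimator, chunk_size, max_workers=4):
    """Process all chunks concurrently and return results in input order."""
    chunks = split_into_chunks(items, chunk_size)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map yields results in the same order as the input chunks.
        per_chunk = pool.map(lambda c: run_component(c, estimator), chunks)
        return [value for chunk_result in per_chunk for value in chunk_result]


# Toy usage: the "estimator" here is a placeholder, not a photo-z algorithm.
print(run_in_parallel(list(range(10)), lambda x: x * 2, chunk_size=3))
```

Keeping the chunking separate from the component makes the chunk size a free parameter that can be tuned independently of the algorithm being run.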

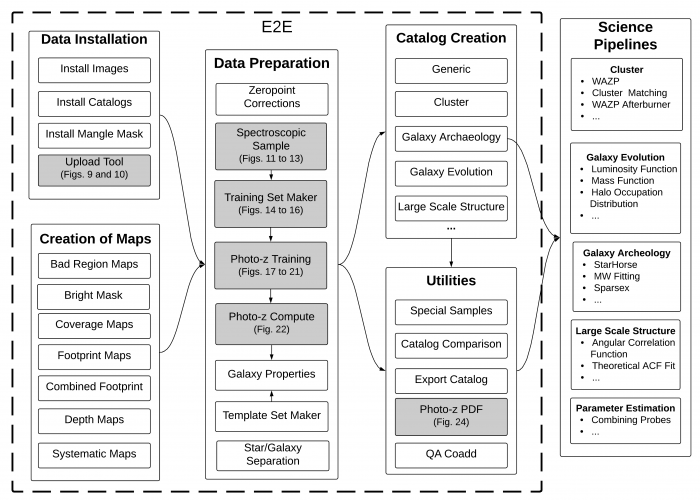

We use data from the DES Y1 internal release as illustrative examples of running the chain of photo-z pipelines in the Portal. These pipelines are part of a larger group that is used to create science-ready catalogs (Figure 2) and that connects directly to Scientific Workflows (see Fausti et al. 2018 for further details). We explore different parallelization configurations using one photo-z algorithm as an example. We study how the duration of the execution depends on the size of the data chunk analyzed by each compute node, forecasting the optimal choices for running on future DES data releases.

Image republished with permission from Elsevier from https://doi.org/10.1016/j.ascom.2018.08.008
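To give a feel for why the chunk size matters, the toy model below estimates the run time when each chunk pays a fixed start-up overhead and chunks are processed in rounds across the available nodes. The model and all of its parameters (`overhead_s`, `seconds_per_object`, and the numbers in the usage example) are assumptions for illustration only; they are not measurements or results from the paper.

```python
import math


def estimated_duration(n_objects, chunk_size, n_nodes,
                       overhead_s, seconds_per_object):
    """Toy estimate of the wall-clock time, in seconds, for a chunked run.

    Every chunk is charged as if it were full, so the result is a slight
    overestimate when the last chunk is only partially filled.
    """
    if chunk_size < 1 or n_nodes < 1:
        raise ValueError("chunk_size and n_nodes must be at least 1")
    n_chunks = math.ceil(n_objects / chunk_size)
    # Nodes work through the chunks in rounds of n_nodes chunks at a time.
    n_rounds = math.ceil(n_chunks / n_nodes)
    time_per_chunk = overhead_s + chunk_size * seconds_per_object
    return n_rounds * time_per_chunk


# Hypothetical numbers: compare a few candidate chunk sizes.
for size in (10, 100, 1000):
    t = estimated_duration(1000, size, n_nodes=5,
                           overhead_s=10.0, seconds_per_object=0.1)
    print(f"chunk_size={size}: about {t:.0f} s")
```

In this model, very small chunks are dominated by the per-chunk overhead, while very large chunks leave nodes idle, so an intermediate size minimizes the duration. Real behavior also depends on I/O, scheduling, and the algorithm itself.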

The Portal is a useful platform for long-term projects that involve many collaborators and the analysis of large amounts of data. For every process executed within its framework, it keeps the critical information (input data, code version, configuration parameters, output files, and results) so that it can be recovered at any future time. This makes it a powerful tool in the era of projects such as LSST.

These findings are described in the article “DES science portal: Computing photometric redshifts,” recently published in the journal Astronomy and Computing.