Bottom-up processing is a form of information processing. The term is often used in psychology to describe how information is first processed at the bottom level of the brain, in the simplest structures that perceive a stimulus before being carried upwards towards more complex levels of analysis. The term bottom-up processing is also sometimes used in fields like nanotechnology and computer science. Let’s take a look at some examples from different fields and contrast it with another form of processing – top-down processing.

Bottom-Up Processing In Psychology And Neuroscience

The concept of bottom-up processing explains how the perception of stimuli is handled within the brain. When we first perceive a stimulus, processing begins at the lowest layers of the brain and filters upwards. The stimulus undergoes increasingly complex analysis until a representation of it has been formed in our minds. According to this theory, our entire conscious experience is built from sensory stimuli that are pieced together.

The brain processes images by initially making comparisons between lines to find basic geometric shapes, which are then fit together. Photo: Alexas_Fotos via Pixabay, CC0

Our brain receives signals from our sensory organs, and these organs are our way of making sense of the world around us. When one of our sensory organs encounters stimuli, it converts those stimuli into neural signals and passes them along our various sensory pathways to the brain, where the information will be processed and understood.

The bottom-up processing theory was proposed by the psychologist James J. Gibson. Gibson challenged older ideas that the nature of perception was dependent on context and learning, and instead hypothesized that perception and sensation were one and the same, with the brain simply analyzing sensory input at different levels. Gibson’s theory is occasionally referred to as the “ecological theory of perception”, since it only makes sense when perception is placed in the context of the environment that supplies the stimuli.

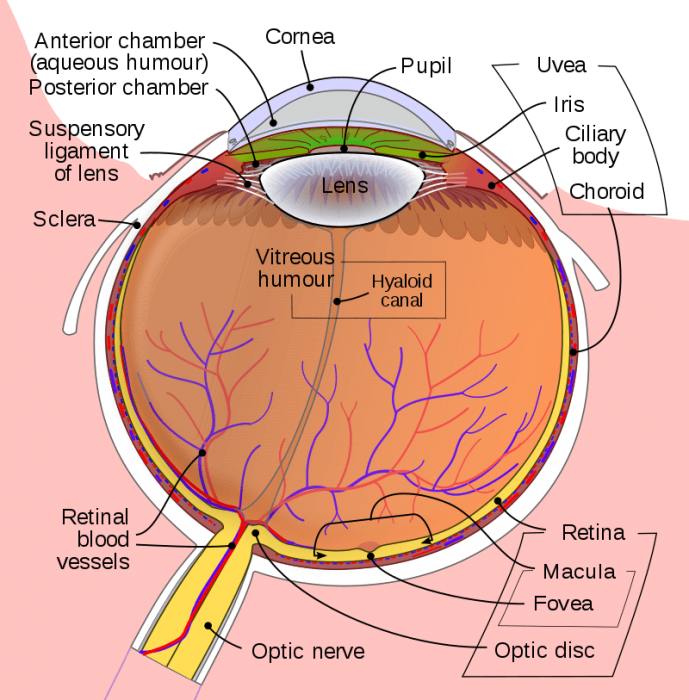

As an example of bottom-up processing, let’s examine how stimuli travel from the eye to the brain, where they are processed. When we perceive anything, such as a color, light waves first bounce off objects in the world around us and are reflected towards our eyes. These light waves enter the eye and reach the retina, where they strike groups of sensory cells called rods and cones. The rods and cones transform the light into electrical impulses, which are then carried along the optic nerve and into the visual portion of the brain. Once the signals arrive via the visual pathways, the visual cortex processes them and our visual experience of the world is created.

Photo: By Rhcastilhos. And Jmarchn. – Schematic_diagram_of_the_human_eye_with_English_annotations.svg, CC BY-SA 3.0, https://commons.wikimedia.org/w/index.php?curid=1597930

A crucial part of bottom-up processing, at least when it comes to visual processing, is the detection of invariant features. Invariant features are attributes of the environment that remain constant, and they supply a baseline against which other features of the environment can be compared. Consider how parallel lines seem to converge to a single vanishing point on the horizon. This is an example of an invariant feature. Another is the horizon ratio: the proportion of an object that appears below the horizon, relative to the proportion that appears above it, remains constant for objects of the same size standing on the same ground, regardless of how far away they are.
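The constancy of the horizon ratio can be checked numerically. The sketch below is only an illustration, not part of Gibson’s work: it assumes a simple pinhole model of the eye, flat level ground, a horizontal line of sight, and an object taller than the observer’s eye height. The function name `horizon_ratio` and its parameters are hypothetical.

```python
import math


def horizon_ratio(eye_height: float, object_height: float, distance: float) -> float:
    """Ratio of the object's image below the horizon to its image above it.

    Assumes a pinhole eye looking horizontally over flat ground, so the
    horizon projects to image height 0 (illustrative model only).
    """
    if distance <= 0:
        raise ValueError("distance must be positive")
    if object_height <= eye_height:
        raise ValueError("object must be taller than the eye height")
    # Perspective projection: image height = (world height - eye height) / distance.
    base_image = (0.0 - eye_height) / distance          # base of object, below horizon
    top_image = (object_height - eye_height) / distance  # top of object, above horizon
    return -base_image / top_image


if __name__ == "__main__":
    # The same 4 m object seen from eye height 1.6 m at several distances.
    for d in (5.0, 20.0, 80.0):
        print(d, horizon_ratio(1.6, 4.0, d))
```

The distance cancels out of the ratio, which reduces to `eye_height / (object_height - eye_height)`. Under these assumptions the value depends only on the object’s size and the observer’s eye height, which is what makes it usable as a baseline.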

This method of explaining perception is an example of reductionism, the analytical method of breaking down processes into their most basic parts to see how they work together. In contrast, some methods of explanation look at a process more holistically.

Top-Down Processing

Bottom-up processing is the conceptual opposite of top-down processing. The idea behind top-down processing is that a person’s previous expectations and experiences influence their perception of stimuli. Contextual clues in the environment are used to interpret information. Let’s make the difference between the two forms of processing more explicit by comparing top-down processing with bottom-up processing.

In the case of bottom-up processing, the process of sensory analysis starts with the collection of stimuli, with what our sensory organs detect. The sensory information is brought to the brain and processed at multiple levels there. In contrast, top-down processing is based on the experiences of an individual and requires some learned associations and knowledge to occur. Bottom-up processing does not require these ingredients, as it happens (more or less) as the stimuli are being experienced.

The theory of top-down processing was proposed in 1970 by psychologist Richard Gregory. Gregory argued that much of the information received by our senses is lost by the time it reaches the brain, and that we therefore have to construct our own perception of reality based on our past experiences. Gregory also argued that forming incorrect hypotheses from sensory information creates perceptual errors, such as visual illusions.

Comparing And Contrasting Bottom-Up Vs. Top-Down

Let’s look at some real-world examples to get an intuition for how bottom-up processing and top-down processing work. Let’s assume that there is an obscured picture in front of you, and you can only perceive part of the image, which shows a long fuzzy shape. If you were to see the image in its entirety, using bottom-up processing, you would be able to perceive it as a cat and the portion of the image you had seen before as the cat’s tail.

Now let’s assume that there is an obscured number in front of you, and it sits between the numbers 24 and 26. Using context clues, information about the environment around you, you can infer through top-down processing that the number is probably a 25. Both top-down processing and bottom-up processing play an important role in how we interpret the world around us.

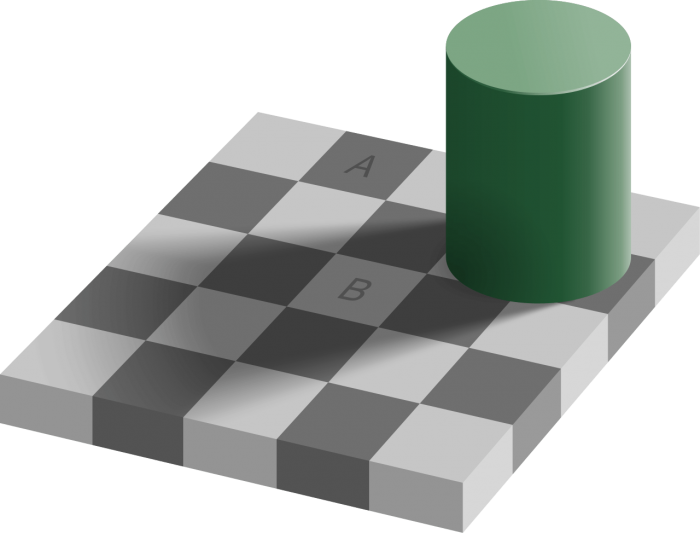

In this image Square A and Square B are the exact same shade of grey, but the color of the surrounding squares tricks the brain into thinking they are different. Photo: By Original: Edward H. Adelson, vectorized by Pbroks13. – Own work, CC BY-SA 4.0, https://commons.wikimedia.org/w/index.php?curid=75000950

Yet these systems can occasionally be tricked by certain patterns of stimuli. Many visual illusions exploit our brain’s top-down processing mode. Perhaps you’ve seen one of the visual illusions where a square is placed amongst squares of other colors, and the square looks darker. Let’s say that it is a red square against a gray background. Off to one side is another red square standing on its own. The red square against the gray background looks darker than the square standing on its own, but only because of what surrounds it. If you were to place the two red squares side by side, they would be the exact same color. Our perception of the square is being influenced by the environment around it.

There is a condition known as face blindness, or prosopagnosia. People with the condition are unable to recognize faces, even their own, although they are still able to perceive them. Other aspects of visual interpretation and processing are unaffected, and people with the condition typically have no problem recognizing other objects. This suggests that a lack of top-down processing leaves the individual unable to associate their perception with their stored knowledge, while the individual’s bottom-up reception remains perfectly functional.

Bottom-Up Processing In Other Fields

The fields of psychology and neuroscience are not the only fields that use the term bottom-up processing. The term is also applied to software development and computer science. In this context, a bottom-up software development plan emphasizes programming and testing early in the creation of a system, as early as possible once the groundwork for a system has been laid out. This is in contrast to system development approaches which emphasize a complete understanding of the system before programming on any individual system module begins. Most approaches to software design combine aspects of both bottom-up and top-down development schemes.

Within programming, a bottom-up approach involves creating the individual elements of the program with great attention to detail. These elements are then linked together to form larger subsystems, and so on, until a complete application has been created. The strategy is sometimes referred to as a seed model, and object-oriented programming (OOP) is one example of such a development strategy. Top-down programming works the other way around, by starting with complex pieces and then dividing them into smaller pieces later on.
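As a rough illustration of the bottom-up style described above, the sketch below builds a tiny word-counting program in three layers: small building blocks first, then a subsystem that links them, then the application on top. The task and all function names (`normalize`, `tokenize`, `word_frequencies`, `top_words`) are hypothetical and chosen only for this example.

```python
from collections import Counter

PUNCTUATION = ".,!?;:"


# Level 1: small building blocks, each written and tested on its own.
def normalize(text: str) -> str:
    """Lower-case the text so that 'The' and 'the' count as one word."""
    return text.lower()


def tokenize(text: str) -> list[str]:
    """Split on whitespace and strip surrounding punctuation from each word."""
    words = (word.strip(PUNCTUATION) for word in text.split())
    return [word for word in words if word]


# Level 2: a subsystem that links the building blocks together.
def word_frequencies(text: str) -> Counter:
    """Count how often each normalized word occurs."""
    return Counter(tokenize(normalize(text)))


# Level 3: the application, assembled from the subsystem below it.
def top_words(text: str, n: int = 3) -> list[tuple[str, int]]:
    """Return the n most frequent words with their counts."""
    return word_frequencies(text).most_common(n)


if __name__ == "__main__":
    print(top_words("The cat saw the other cat. The end.", 2))
```

Each layer depends only on the layers beneath it, so the lower pieces can be finished and tested before the upper ones exist. A top-down version of the same program would start from `top_words` and stub out the helpers until later.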

In management and organizational contexts, top-down processing refers to a system in which an executive-level decision maker passes decisions to people lower in the hierarchy, who in turn pass them on to those lower on the ladder, and so on. Meanwhile, a bottom-up system is characterized as a grassroots approach, with decisions being made by groups of individuals working together.

In the field of nanotechnology, bottom-up approaches function by manipulating objects at the molecular level and combining them into more complex structures as one moves on. Meanwhile, top-down approaches use large externally controlled devices in order to assemble nanoscale products.