Those who take moderate and nuanced positions often lament the rise of extreme rhetoric and polarized debate. Social media is often blamed for this trend, but polarizing language is also widely perceived to be increasing in mainstream and online news media.

In our most recently published paper (Al-Mosaiwi & Johnstone, 2018), we use automated text analysis to identify linguistic markers of extreme and moderate language. We then use machine learning to train a classifier (a computer algorithm) to recognize extreme and moderate language. Finally, we test the classifier's predictive accuracy by running it on new extracts of moderate and extreme natural-language text. Based on the linguistic patterns it has learned, it labels each extract as either “extreme” or “moderate.”

How do we decide what counts as extreme and what counts as moderate language? We borrow the methodology used in sentiment analysis. In traditional sentiment analysis, an objective rating scale determines whether a review is positive or negative. For example, on IMDB, a film review that awards more than 5 out of 10 stars (>5/10) is deemed positive, and one that awards fewer than 5 stars (<5/10) is deemed negative. Machines then learn the linguistic patterns associated with positive and negative reviews, as defined by this rating scale.
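As a minimal sketch of this thresholding rule, the hypothetical helper `sentiment_label` below maps an IMDB-style rating on a 1 to 10 scale to a sentiment label. The rule as stated does not cover a rating of exactly 5, so leaving it unlabeled is our assumption.

```python
from typing import Optional


def sentiment_label(stars: int) -> Optional[str]:
    """Label an IMDB-style review from its 1-10 star rating.

    More than 5 stars is positive and fewer than 5 is negative; a rating of
    exactly 5 is left unlabeled (an assumption, not part of the stated rule).
    """
    if not 1 <= stars <= 10:
        raise ValueError(f"rating {stars} is outside the 1-10 scale")
    if stars > 5:
        return "positive"
    if stars < 5:
        return "negative"
    return None


print([sentiment_label(s) for s in (9, 2, 5)])  # ['positive', 'negative', None]
```

Returning `None` rather than forcing a label keeps midpoint reviews out of the training data, which is one common way to avoid ambiguous examples.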

The use of objective rating scales to distinguish extreme from moderate responses is well established in psychology. On questionnaires with 7-point Likert-type scales, a response at either end-point of the scale (i.e., 1 or 7) is considered extreme, whereas a response anywhere from 2 to 6 is considered moderate.
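The end-point rule is simple enough to express directly. In this sketch, `likert_category` and `extreme_proportion` are hypothetical names. Summarizing a respondent by the share of answers that land on an end-point is one common way to quantify extreme responding, and is used here only for illustration.

```python
from typing import Sequence


def likert_category(response: int, points: int = 7) -> str:
    """Classify one Likert response: either end-point is extreme, the rest moderate."""
    if not 1 <= response <= points:
        raise ValueError(f"response {response} is outside 1-{points}")
    return "extreme" if response in (1, points) else "moderate"


def extreme_proportion(responses: Sequence[int], points: int = 7) -> float:
    """Share of a respondent's answers that fall on a scale end-point."""
    if not responses:
        raise ValueError("no responses given")
    n_extreme = sum(likert_category(r, points) == "extreme" for r in responses)
    return n_extreme / len(responses)


print(extreme_proportion([1, 4, 7, 7, 3]))  # 0.6
```

The `points` parameter lets the same rule cover scales of other lengths, such as 5-point scales.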

Extreme responding on Likert scales has been linked to psychopathology (mental health disorders), personality disorders, lower IQ, less successful life outcomes, and certain cultures. On that last point, studies have linked extreme responding to Black (e.g., Bachman, O’Malley, & Freedman-Doan, 2010) and Latino (e.g., Marin, Gamba, & Marin, 1992) cultures, while moderate responding has been linked to Asian cultures (Chen, Lee, & Stevenson, 1995).

In our study, we use ratings on objective scales to classify reviews as extreme or moderate, and then study the linguistic patterns associated with each class. Our data come from IMDB, TripAdvisor, and Amazon reviews, because all three pair free-text reviews with an objective rating.
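Because the three sites use different scales, the end-point rule has to know each scale's maximum. The sketch below assumes IMDB ratings run from 1 to 10 and Amazon and TripAdvisor ratings from 1 to 5, and that, as with Likert scales, only the lowest and highest ratings count as extreme. `Review`, `SCALE_MAX`, and `label_review` are hypothetical names, not part of the study's code.

```python
from typing import NamedTuple

# Assumed maximum rating per site: IMDB user ratings run 1-10,
# Amazon and TripAdvisor ratings run 1-5.
SCALE_MAX = {"imdb": 10, "amazon": 5, "tripadvisor": 5}


class Review(NamedTuple):
    site: str
    text: str
    rating: int


def label_review(review: Review) -> str:
    """Label a review 'extreme' if its rating is the lowest or highest on its site's scale."""
    top = SCALE_MAX[review.site]
    if not 1 <= review.rating <= top:
        raise ValueError(f"rating {review.rating} is outside 1-{top} for {review.site}")
    return "extreme" if review.rating in (1, top) else "moderate"


reviews = [
    Review("imdb", "A masterpiece. Never seen anything like it!", 10),
    Review("amazon", "Works well, though the battery is somewhat weak.", 4),
    Review("tripadvisor", "Worst stay ever!", 1),
]
print([label_review(r) for r in reviews])  # ['extreme', 'moderate', 'extreme']
```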

We wanted to identify features that would generalize beyond these three websites, so we restricted feature selection to “function” words. Nouns, verbs, and adjectives are “content” words, which depend heavily on the subject of a text. By contrast, articles, prepositions, and determiners are function words: they relate to the grammatical structure of a text and have little connection to its content. By focusing exclusively on function words, we can generalize our findings beyond the websites in the study.
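To illustrate restricting features to function words, the sketch below lowercases a text, splits it into word and exclamation-mark tokens, and keeps only tokens found in a function-word list. The list here is a tiny hypothetical sample chosen for the example, not the list used in the study.

```python
import re

# Tiny illustrative function-word list (hypothetical; not the study's list).
FUNCTION_WORDS = frozenset({
    "a", "an", "the",                       # articles
    "in", "on", "of", "to", "with",         # prepositions
    "this", "that", "my", "your", "some",   # determiners
    "and", "but", "or",                     # conjunctions
    "i", "you", "it",                       # pronouns
})

# Words (optionally with one apostrophe, e.g. "can't") or exclamation marks.
TOKEN_RE = re.compile(r"[a-z]+(?:'[a-z]+)?|!")


def tokenize(text: str) -> list[str]:
    return TOKEN_RE.findall(text.lower())


def function_tokens(text: str) -> list[str]:
    """Keep only the tokens that appear in the function-word list."""
    return [tok for tok in tokenize(text) if tok in FUNCTION_WORDS]


print(function_tokens("The plot of this film was dull, but my kids loved it!"))
# ['the', 'of', 'this', 'but', 'my', 'it']
```

Content words such as “plot,” “film,” and “dull” are dropped, which is what keeps the resulting features largely independent of topic.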

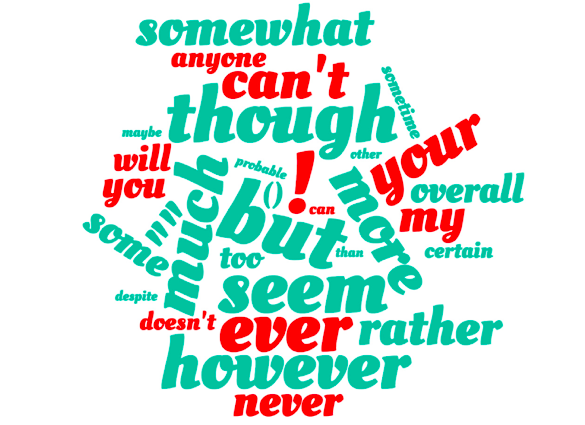

We identified 11 unigrams (single words or symbols) that are markers specific to extreme natural language and 20 that are specific to moderate natural language (see fig. 1). Among the markers for extreme reviews, “ever,” “never,” and “anyone” all denote absolutes; “my,” “you,” and “your” are personal pronouns and possessive determiners; the two negations “can’t” and “doesn’t” are used as categorical imperatives; and exclamation marks are intensifiers that signal extreme language.

Among the markers for moderate reviews, “but,” “though,” “despite,” “other,” and “however” all introduce nuance; “much” and “more” both refer to large amounts; “rather,” “somewhat,” “sometimes,” and “some” all specify a moderate extent; “seem,” “maybe,” and “probable” are vague and noncommittal; “overall” combines separate components; and “certain” specifies subcomponents.
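The markers can be turned into simple count features. The sketch below uses only the markers named in the text (fig. 1 lists the full sets of 11 and 20). It matches whole tokens exactly, so inflected forms such as “seems” are not counted, and it normalizes curly apostrophes so that “can’t” matches. The function name `marker_counts` is hypothetical.

```python
import re
from collections import Counter

# Only the markers named in the text; fig. 1 lists the full sets.
EXTREME_MARKERS = ("ever", "never", "anyone", "my", "you", "your",
                   "can't", "doesn't", "!")
MODERATE_MARKERS = ("but", "though", "despite", "other", "however", "much",
                    "more", "rather", "somewhat", "sometimes", "some", "seem",
                    "maybe", "probable", "overall", "certain")

# Words (optionally with one apostrophe, e.g. "can't") or exclamation marks.
TOKEN_RE = re.compile(r"[a-z]+(?:'[a-z]+)?|!")


def marker_counts(text: str) -> dict[str, int]:
    """Count exact-token occurrences of extreme and moderate markers."""
    normalized = text.lower().replace("\u2019", "'")  # curly -> straight apostrophe
    tokens = Counter(TOKEN_RE.findall(normalized))
    return {
        "extreme": sum(tokens[m] for m in EXTREME_MARKERS),
        "moderate": sum(tokens[m] for m in MODERATE_MARKERS),
    }


print(marker_counts("Best film I have ever seen! You can\u2019t miss it!"))
# {'extreme': 5, 'moderate': 0}
print(marker_counts("Good, though somewhat long; overall worth it."))
# {'extreme': 0, 'moderate': 3}
```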

Trained on the markers above to recognize extreme and moderate patterns in natural language, our classifier achieved a prediction accuracy of over 90% when tested on new data.
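The paragraph above does not say which learning algorithm was used. The sketch below swaps in a multinomial naive Bayes model with add-one smoothing over counts of the markers named earlier, purely to show the train-then-test-on-new-data workflow. The class name, helper names, and the four training and two test sentences are all made up for illustration. They bear no relation to the study's data or its reported accuracy.

```python
import math
import re
from collections import Counter

# Markers named in the text (a subset of the full sets in fig. 1).
MARKERS = ("ever", "never", "anyone", "my", "you", "your", "can't", "doesn't",
           "!", "but", "though", "despite", "other", "however", "much", "more",
           "rather", "somewhat", "sometimes", "some", "seem", "maybe",
           "probable", "overall", "certain")
TOKEN_RE = re.compile(r"[a-z]+(?:'[a-z]+)?|!")


def features(text: str) -> Counter:
    """Counts of each marker in the text (non-markers are ignored)."""
    tokens = TOKEN_RE.findall(text.lower().replace("\u2019", "'"))
    return Counter(t for t in tokens if t in MARKERS)


class MarkerNaiveBayes:
    """Multinomial naive Bayes with add-one smoothing over marker counts."""

    def fit(self, texts, labels):
        self.classes = sorted(set(labels))
        self.log_prior, self.log_lik = {}, {}
        for c in self.classes:
            docs = [t for t, y in zip(texts, labels) if y == c]
            self.log_prior[c] = math.log(len(docs) / len(texts))
            totals = Counter()
            for doc in docs:
                totals.update(features(doc))
            denom = sum(totals.values()) + len(MARKERS)
            self.log_lik[c] = {m: math.log((totals[m] + 1) / denom) for m in MARKERS}
        return self

    def predict(self, text):
        feats = features(text)

        def score(c):
            return self.log_prior[c] + sum(n * self.log_lik[c][m] for m, n in feats.items())

        # Ties (e.g. a text with no markers and equal priors) go to the first class.
        return max(self.classes, key=score)


def accuracy(model, texts, labels):
    return sum(model.predict(t) == y for t, y in zip(texts, labels)) / len(labels)


train_texts = ["Never again! Worst hotel ever!",
               "You can't beat this. My favourite film ever!",
               "Good location, but the rooms were somewhat small.",
               "Decent, though maybe a little long overall."]
train_labels = ["extreme", "extreme", "moderate", "moderate"]
test_texts = ["Anyone who buys this is mad!",
              "Some scenes drag, however the cast is rather good."]
test_labels = ["extreme", "moderate"]

model = MarkerNaiveBayes().fit(train_texts, train_labels)
print(accuracy(model, test_texts, test_labels))  # 1.0
```

The test sentences share no exact token sequences with the training sentences; the model classifies them only through the marker counts it has seen, which mirrors the idea of testing on new extracts.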

Measuring extremism and moderation through natural language is more informative, flexible, and ecologically valid than the previous approach, which relied on Likert-type scales. Moreover, with online natural-language data proliferating, the classifier could be continually improved with more training data and used to identify extreme and moderate language across a wide range of online sources.

These findings are described in the article “Linguistic markers of moderate and absolute natural language,” recently published in the journal *Personality and Individual Differences*. The work was conducted by Mohammed Al-Mosaiwi and Tom Johnstone of the University of Reading.